Abstract

The Yule–Simon distribution is usually employed in the analysis of frequency data. As the Bayesian literature, so far, has ignored this distribution, here we show the derivation of two objective priors for the parameter of the Yule–Simon distribution. In particular, we discuss the Jeffreys prior and a loss-based prior, which has recently appeared in the literature. We illustrate the performance of the derived priors through a simulation study and the analysis of real datasets.


1 Introduction

In this work we aim to fill a gap in the Bayesian literature by proposing two objective priors for the parameter of the Yule–Simon distribution. The distribution was first discussed in Yule (1925) and later re-proposed in Simon (1955), and it can be used in scenarios where the focus of interest is some form of frequency in the data. For example, Yule (1925) used it to model the abundance of biological genera, while Simon (1955) exploited the properties of the distribution to model the addition of new words to a text. Other areas of application can naturally be considered, for instance whenever frequencies represent the elementary unit of observation. Gallardo et al. (2016), for example, highlight that the heavy-tailed property of the Yule–Simon distribution allows for extreme values even for small sample sizes. In particular, they argue that this property makes the distribution suitable for modelling short survival times which, due to the nature of the problem, occur with relatively high frequency. In this paper we show the use of the Yule–Simon distribution in modelling daily increments of social network stock prices, surnames and ‘superstar’ success in the music industry.

Despite the wide range of applications, the literature on the Yule–Simon distribution appears to be limited. More surprisingly, to the best of our knowledge, the problem has received very little attention from the Bayesian community. An exception is Leisen et al. (2017), who propose an explicit Gibbs sampling scheme when a Gamma prior is chosen for the shape parameter, and illustrate this approach with an application to text analysis and count data regression. Given the challenges that classical inference faces in estimating the parameter of the distribution (Garcia Garcia 2011), the possibility of tackling the problem from a Bayesian perspective is undoubtedly appealing.

In addressing the estimation of the shape parameter of the Yule–Simon distribution within the Bayesian framework, we opted for an objective approach. We propose two priors: the first is the Jeffreys rule prior (Jeffreys 1961), while the second is obtained by applying the loss-based approach discussed in Villa and Walker (2015). We would like to highlight that the purpose of this work is to present an objective Bayesian inferential procedure for the Yule–Simon distribution, without discussing whether the model is the best choice to represent a given set of observations. Although we formally introduce the Yule–Simon distribution and its derivation in the next section, it is important to anticipate the general idea here, so as to fully appreciate the gain in adopting an objective approach. As nicely illustrated in Chung and Cox (1994), the shape parameter of the distribution is linked via a one-to-one transformation to the probability that the next observation takes a value not previously observed. For example, if we have observed n words in a text, we wish to make inference on the probability that the \((n+1)\)-th observation is a word not yet encountered in the text, assuming this probability to be constant. It is then clear that the Yule–Simon distribution models extremely large, and hence rare, events. As such, the information in the data about these events is limited, and a “wrongly” elicited prior could end up dominating the data. On the other hand, a prior with minimal information content would allow the data “to speak”, resulting in a more robust inferential procedure. We do not advocate that an objective approach is the only suitable one in every circumstance. In fact, if reliable prior information is available, an elicited prior would, in general, be the natural choice. Alas, for phenomena with extremely rare events, such information is often insufficient or incomplete, and an objective choice would then be the most sensible one.

The paper is organized as follows. In Sect. 2 we set the scene by introducing the Yule–Simon distribution and the notation used throughout the paper. The proposed objective priors are derived and discussed in Sect. 3. Section 4 analyses the frequentist performance of the posterior distributions yielded by the proposed priors: through several simulation scenarios, we compare the inferential capacity of the objective priors discussed here. In Sect. 5 we apply the priors to three real datasets. Finally, Sect. 6 is reserved for concluding remarks and points of discussion.

2 Preliminaries

Possibly the best-known functional form of the Yule–Simon distribution is the following:

\[ f(k;\rho ) = \rho \, \text{ B }(k,\rho +1), \qquad k=1,2,\ldots , \quad \rho >0, \tag{1} \]

where \(\text{ B }(\cdot ,\cdot )\) is the beta function and \(\rho \) is the shape parameter. Note that the expectation of the Yule–Simon distribution exists for \(\rho >1\) and is equal to \(\rho /(\rho -1)\). The distribution in (1) was first proposed by Yule (1925) in the field of biology, in particular to represent the distribution of species among genera in some higher taxon of biotic organisms. Later, Simon (1955) noticed that the same distribution arises in other phenomena which appear to have no connection with one another. These include the distribution of word frequencies in texts, the distribution of authors by number of scientific articles published, the distribution of cities by population and the distribution of incomes by size. The derivation followed by Simon (1955) was based on word frequencies, and it relies on two assumptions:

(i) The probability that the \((n+1)\)-th word is a word observed k times in the first n words is proportional to k; and

(ii) The probability that the \((n+1)\)-th word is new (i.e. not observed in the first n words) is constant and equal to \(\alpha \in (0,1)\).

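Simon (1955) shows that, under the condition of stationarity, the process defined by the above two assumptions yields (1) with \(\rho = 1/(1-\alpha )\), that is:

\[ f(k;\alpha ) = \frac{1}{1-\alpha }\, \text{ B }\Bigl (k,\frac{1}{1-\alpha }+1\Bigr ), \qquad k=1,2,\ldots , \quad 0<\alpha <1. \tag{2} \]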

An important consequence of assumption (ii) above is that the shape parameter \(\rho \) of the distribution takes values in \((1,+\infty )\). In other words, should we use the model as in Yule (1925), which includes the possibility that \(0<\rho \le 1\), we would lose the interpretation of the generating process described by the two assumptions above. In fact, for \(\rho <1\) the probability of observing a new word, expressed by \(\alpha \), would be negative, while for \(\rho =1\) it would be zero, rendering the process trivial (i.e. all the observed words would be equal to the first one observed). Furthermore, the expectation of the Yule–Simon distribution is defined only for values of the shape parameter larger than one, and this property is something one would expect in most applications. For all the above reasons, in this work we focus on the parametrization of the Yule–Simon distribution given in (2), that is, we discuss prior distributions for \(\alpha \).
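As a minimal sketch (illustrative Python, not code from the original study; the function names are our own), the pmf in the \(\alpha \) parametrization can be evaluated on the log scale with `math.lgamma`. Draws can be generated from a mixture representation: if \(W\) is exponential with rate \(\rho \) and, given \(W\), \(K\) is geometric on \(\{1,2,\ldots \}\) with success probability \(e^{-W}\), then integrating out \(W\) gives \(\rho \,\text{ B }(k,\rho +1)\). In this parametrization the mean \(\rho /(\rho -1)\) equals \(1/\alpha \), which offers a quick sanity check on the sampler.

```python
import math
import random


def rho_from_alpha(alpha: float) -> float:
    """Shape parameter rho = 1 / (1 - alpha), which is > 1 for alpha in (0, 1)."""
    return 1.0 / (1.0 - alpha)


def yule_simon_pmf(k: int, alpha: float) -> float:
    """f(k; alpha) = rho * B(k, rho + 1) with rho = 1 / (1 - alpha), for k = 1, 2, ..."""
    rho = rho_from_alpha(alpha)
    # log B(k, rho + 1) = lgamma(k) + lgamma(rho + 1) - lgamma(k + rho + 1)
    log_beta = math.lgamma(k) + math.lgamma(rho + 1.0) - math.lgamma(k + rho + 1.0)
    return rho * math.exp(log_beta)


def sample_yule_simon(alpha: float, rng: random.Random) -> int:
    """One draw via W ~ Exp(rate rho), then K | W ~ Geometric(exp(-W)) on {1, 2, ...}."""
    rho = rho_from_alpha(alpha)
    w = rng.expovariate(rho)
    if w == 0.0:
        return 1  # success probability exp(-0) = 1
    # log(1 - exp(-w)), computed stably for both small and large w
    log_fail = math.log(-math.expm1(-w)) if w < 0.5 else math.log1p(-math.exp(-w))
    u = 1.0 - rng.random()  # uniform on (0, 1], so log(u) is finite
    # Inversion: P(K > j) = (1 - p)^j, hence K = 1 + floor(log(u) / log(1 - p))
    return 1 + int(math.log(u) / log_fail)
```

For instance, `[sample_yule_simon(0.4, random.Random(1)) for _ in range(100)]` would produce a sample of size 100 with \(\alpha =0.4\); the sample mean should then hover around \(1/0.4=2.5\), although with \(\rho \le 2\) the variance is infinite and convergence is slow.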

In addition to the parametrization of the Yule–Simon distribution in (2), we will also consider the possibility of treating the parameter \(\alpha \) as discrete. This is common in the literature, especially when implementations of the model are considered; see, for example, Simon (1955) and Garcia Garcia (2011). The discretization of \(\alpha \) is discussed in detail in Sect. 3.2.

3 Objective priors for the Yule–Simon distribution

This section is devoted to the derivation of two objective priors for the Yule–Simon distribution: the Jeffreys prior and the loss-based prior. The former assumes that the parameter space of \(\alpha \) is continuous and is based on the well-known invariance property proposed by Jeffreys (1961); the latter assumes a discrete parameter space and is based on Villa and Walker (2015). Based on the previous description of the Yule–Simon distribution, we consider the following Bayesian model,

\[ k_1,\ldots ,k_n \mid \alpha \overset{\text{iid}}{\sim } f(k;\alpha ), \qquad \alpha \sim \pi (\alpha ), \]

where \(f(k;\alpha )\) is the Yule–Simon distribution in (2) and \(\pi (\alpha )\) is the objective prior distribution for the parameter of interest \(\alpha \), either the Jeffreys or the loss-based prior.

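The likelihood function of the above model, conditional on the parameter \(\alpha \), is

\[ L(\mathbf k |\alpha ) = \prod _{i=1}^{n} f(k_i;\alpha ) = \prod _{i=1}^{n} \frac{1}{1-\alpha }\, \text{ B }\Bigl (k_i,\frac{1}{1-\alpha }+1\Bigr ), \]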

where \(\mathbf k =(k_1,\ldots ,k_n)\) is the vector of observations. To carry out the Bayesian analysis of the model, we consider the posterior distribution for \(\alpha \):

\[ \pi (\alpha |\mathbf k ) \propto L(\mathbf k |\alpha )\, \pi (\alpha ), \]

where \(\pi (\alpha )\) is the objective prior described in the remainder of this section.
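The likelihood and the unnormalized log posterior can be sketched as follows. This is an illustrative Python version, not the authors' implementation: `log_likelihood` and the user-supplied callable `log_prior` are hypothetical names of ours. It works on the log scale and groups repeated values of \(k\), which is convenient for frequency data with many ties.

```python
import math
from collections import Counter


def log_likelihood(alpha, ks):
    """log L(k | alpha) = sum_i log f(k_i; alpha), f(k; alpha) = rho B(k, rho + 1), rho = 1/(1-alpha)."""
    if not 0.0 < alpha < 1.0:
        return -math.inf
    rho = 1.0 / (1.0 - alpha)
    const = math.log(rho) + math.lgamma(rho + 1.0)
    # Repeated values share the same term, so sum over distinct values with multiplicities.
    return sum(c * (const + math.lgamma(k) - math.lgamma(k + rho + 1.0))
               for k, c in Counter(ks).items())


def log_posterior_unnormalized(alpha, ks, log_prior):
    """log L(k | alpha) + log pi(alpha), i.e. the log posterior up to an additive constant."""
    ll = log_likelihood(alpha, ks)
    return ll + log_prior(alpha) if ll > -math.inf else -math.inf
```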

3.1 The Jeffreys prior

The Jeffreys prior is defined as follows (Jeffreys 1961):

\[ \pi (\alpha ) \propto \sqrt{\mathcal {I}(\alpha )}, \]

where \(\mathcal {I}(\alpha )=\mathbb {E}_{\alpha }\biggl [-\frac{\partial ^{2}\log (f(k;\alpha ))}{\partial \alpha ^{2}} \biggr ]\) is the Fisher information. The next theorem, whose proof is in the Appendix, provides an explicit expression of the Jeffreys prior for the Yule–Simon distribution.

Theorem 3.1

Let \(f(k;\alpha )\) be the Yule–Simon distribution defined in Eq. (2), with \(0<\alpha <1\). The Jeffreys prior for \(\alpha \) is

where

with \(_{3}F_{2}\) being the generalized hypergeometric function.

The Jeffreys prior stated in Theorem 3.1 is a proper prior. In fact, let

where

is the normalizing constant of \(\pi (\alpha )\).

It is not difficult to prove that

Indeed,

The result above follows from the following inequality

Since the prior in (3) is proper, the resulting posterior distribution for \(\alpha \) is proper as well, and hence suitable for inference.
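The closed form in Theorem 3.1 is not reproduced here. As a hedged numerical cross-check, the Fisher information can instead be computed from its definition in the \(\rho \) parametrization, where \(-\partial ^2 \log f/\partial \rho ^2 = 1/\rho ^2 - \psi ^{(1)}(\rho +1) + \psi ^{(1)}(k+\rho +1)\), and then mapped to \(\alpha \) via \(\mathcal {I}(\alpha ) = \mathcal {I}(\rho )\,(\mathrm d\rho /\mathrm d\alpha )^2\) with \(\mathrm d\rho /\mathrm d\alpha = (1-\alpha )^{-2}\). The sketch assumes the expectation series can be truncated at `n_terms` and uses a hand-written trigamma function (recurrence plus asymptotic series) so that only the standard library is needed. It returns the unnormalized prior.

```python
import math


def trigamma(x: float) -> float:
    """psi^(1)(x) for x > 0: shift x up to >= 6 by recurrence, then use the asymptotic series."""
    result = 0.0
    while x < 6.0:
        result += 1.0 / (x * x)  # psi1(x) = psi1(x + 1) + 1 / x^2
        x += 1.0
    inv = 1.0 / x
    inv2 = inv * inv
    # 1/x + 1/(2x^2) + 1/(6x^3) - 1/(30x^5) + 1/(42x^7) - 1/(30x^9)
    result += inv + 0.5 * inv2 + inv * inv2 * (
        1.0 / 6.0 - inv2 * (1.0 / 30.0 - inv2 * (1.0 / 42.0 - inv2 / 30.0))
    )
    return result


def fisher_information_rho(rho: float, n_terms: int = 20000) -> float:
    """I(rho) = 1/rho^2 - psi1(rho + 1) + E[psi1(K + rho + 1)], with the expectation truncated."""
    pmf = rho / (rho + 1.0)  # f(1; rho) = rho * B(1, rho + 1)
    expectation = 0.0
    for k in range(1, n_terms + 1):
        expectation += pmf * trigamma(k + rho + 1.0)
        pmf *= k / (k + rho + 1.0)  # f(k + 1) / f(k) = k / (k + rho + 1)
    return 1.0 / rho**2 - trigamma(rho + 1.0) + expectation


def jeffreys_unnormalized(alpha: float, n_terms: int = 20000) -> float:
    """sqrt(I(alpha)) = sqrt(I(rho)) * d rho / d alpha, with rho = 1 / (1 - alpha)."""
    rho = 1.0 / (1.0 - alpha)
    drho_dalpha = 1.0 / (1.0 - alpha) ** 2
    return math.sqrt(fisher_information_rho(rho, n_terms)) * drho_dalpha
```

Evaluating `jeffreys_unnormalized` on a grid of \(\alpha \) values should reproduce the increasing shape described for the Jeffreys prior, up to the normalizing constant.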

3.2 The loss-based prior

Villa and Walker (2015) introduced a method for specifying an objective prior for discrete parameters. The idea is to assign a worth to each parameter value by objectively measuring what is lost if that value is removed from the parameter space when it is the true one. The loss is evaluated by applying the well-known result of Berk (1966) stating that, if a model is misspecified, the posterior distribution asymptotically accumulates on the model which is nearest to the true one in terms of the Kullback–Leibler divergence.

Given that the parameter \(\alpha \in (0,1)\) of the Yule–Simon distribution is in principle continuous, the above method cannot be applied directly. However, since the interval is bounded, it is easy to discretize; for instance, for a chosen integer M, we can consider the set

\[ \Bigl \{\frac{1}{M},\frac{2}{M},\ldots ,\frac{M-1}{M}\Bigr \}. \]

The worth of the parameter value \(\alpha \) is then represented by the Kullback–Leibler divergence \(D_{KL}(f(k|\alpha )\Vert f(k|\alpha ^\prime ))\), where \(\alpha ^\prime \ne \alpha \) is the parameter value that minimizes the divergence. To link the worth of a parameter value to the prior mass, Villa and Walker (2015) use the self-information loss function. This particular type of loss function measures the loss in information contained in a probability statement (Merhav and Feder 1998). As we now have, for each value of \(\alpha \), the loss in information measured in two different ways, we simply equate them, obtaining the loss-based prior of Villa and Walker (2015):

\[ \pi (\alpha ) \propto \exp \Bigl \{\min _{\alpha ^\prime \ne \alpha } D_{KL}(f(k|\alpha )\Vert f(k|\alpha ^\prime ))\Bigr \} - 1, \tag{4} \]

where

As the discretized parameter space is finite, the prior (4) is proper for any choice of M; hence, the resulting posterior is proper as well.

An important aspect is that the value \(\alpha ^\prime \) minimizing the Kullback–Leibler divergence cannot be determined analytically, so the prior has to be derived computationally. However, even for large values of M, the computational cost is trifling compared with the Monte Carlo procedure needed to sample from the posterior distribution.
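A minimal sketch of this computation on the grid \(\{1/M,\ldots ,(M-1)/M\}\), using \(\pi (\alpha ) \propto \exp \{\min _{\alpha ^\prime \ne \alpha } D_{KL}(f(k|\alpha )\Vert f(k|\alpha ^\prime ))\} - 1\), is given below. It is illustrative, not the authors' code. The divergences are approximated by truncating the sum over k at `n_terms`, which is an assumption (the tails are heavy for small \(\alpha \), so larger values give more accurate divergences). The minimum is taken over all other grid points, and `math.expm1` evaluates \(e^x-1\) without cancellation when the minimum divergence is close to zero.

```python
import math


def log_pmf_table(alpha: float, n_terms: int) -> list:
    """log f(k; alpha) for k = 1..n_terms, with rho = 1 / (1 - alpha)."""
    rho = 1.0 / (1.0 - alpha)
    const = math.log(rho) + math.lgamma(rho + 1.0)
    return [const + math.lgamma(k) - math.lgamma(k + rho + 1.0) for k in range(1, n_terms + 1)]


def kl_divergence(logp: list, logq: list) -> float:
    """Truncated D_KL(p || q) = sum_k p(k) * (log p(k) - log q(k))."""
    return sum(math.exp(a) * (a - b) for a, b in zip(logp, logq))


def loss_based_prior(M: int, n_terms: int = 10000) -> dict:
    """Prior mass on {1/M, ..., (M-1)/M}: proportional to exp(min KL to another grid point) - 1."""
    grid = [j / M for j in range(1, M)]
    tables = {a: log_pmf_table(a, n_terms) for a in grid}
    weights = {}
    for a in grid:
        min_kl = min(kl_divergence(tables[a], tables[b]) for b in grid if b != a)
        weights[a] = math.expm1(min_kl)  # exp(x) - 1, accurate for x close to zero
    total = sum(weights.values())
    return {a: w / total for a, w in weights.items()}
```

With `loss_based_prior(10)` the masses should increase towards the upper end of the grid, in line with the behaviour described for the loss-based prior. The cost grows with \(M^2\) times `n_terms`, which is negligible compared with a long MCMC run.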

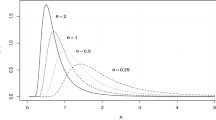

To get a feel for the prior distributions derived above, we have plotted them in Fig. 1. The priors behave similarly, in the sense that both tend to increase as \(\alpha \) increases.

4 Simulation study

The objective priors defined in Sect. 3 are derived automatically from properties intrinsic to the Yule–Simon distribution. In other words, they do not depend on expert knowledge or previous observations. To validate them, it is therefore necessary to assess the goodness of the priors by making inference on simulated data. This section is dedicated to a simulation study on the parameter \(\alpha \) using observations drawn from fully known distributions.

We have considered different sample sizes, \(n=30\), \(n=100\) and \(n=500\), to analyse the behaviour of the prior distributions under different levels of information coming from the data. Here we show the results for \(n=100\) only, as the differences when using \(n=30\) and \(n=500\) are limited to the precision of the inferential results: relatively low for \(n=30\) and relatively high for \(n=500\), as one would expect. Moreover, the differences in the performance of the two priors noted for \(n=100\) persist for the other sample sizes. As the loss-based prior depends on the discretization of the parameter space, for illustration purposes we have considered \(M=10\) and \(M=20\), that is \(\alpha \in \{0.1,0.2,\ldots ,0.9\}\) and \(\alpha \in \{0.05,0.10,\ldots ,0.95\}\), respectively.

Both the Jeffreys prior and the loss-based prior yield posterior distributions for \(\alpha \) which are not analytically tractable; hence, it is advisable to use Monte Carlo methods. The reasons behind this choice are discussed in the following two remarks.

Remark 1

In the case of the Jeffreys prior, one may use univariate numerical integration to compute quantities of interest, such as the posterior mean and credible intervals. However, in our experience, numerical integration may fail when the complexity of the integrand increases.

Remark 2

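For the loss-based prior, one may be tempted to compute the posterior probabilities directly as

\[ \pi (\alpha |\mathbf k ) = \frac{L(\mathbf k |\alpha )\,\pi (\alpha )}{\sum _{\alpha ^\prime } L(\mathbf k |\alpha ^\prime )\,\pi (\alpha ^\prime )}, \]

where the sum runs over the discretized parameter space.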

However, the Kullback–Leibler divergence in Eq. (4) may take values close to zero, leading to probabilities which may be treated as zero by the software employed. This is particularly true for moderately large values of M. A Metropolis–Hastings algorithm on the logarithmic scale overcomes this problem; for both priors, we use a proposal transition kernel for the parameter \(\alpha \) that does not depend on the current state. The proposal kernel is a Beta distribution, \(\mathcal {B}e(a,b)\), with \(a=b=0.5\), for the Jeffreys prior, and a discrete uniform distribution \(\text{ DU }(1/M, (M-1)/M)\) over the discretized parameter space for the loss-based prior.
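Because the proposals just described do not depend on the current state, the log-scale Metropolis–Hastings scheme can be sketched as an independence sampler. The target enters only through `log_target`, a user-supplied function returning \(\log L(\mathbf k |\alpha ) + \log \pi (\alpha )\) up to a constant (a hypothetical interface of ours, not the authors' code), so no probability is ever exponentiated. For the Jeffreys prior the proposal is \(\mathcal {B}e(0.5,0.5)\), as in the text. For the loss-based prior one would pass `propose=lambda rng: rng.choice(grid)` together with a constant `log_proposal`, since a discrete uniform proposal cancels in the acceptance ratio.

```python
import math
import random


def independence_mh(log_target, propose, log_proposal, init, n_iter=10_000, burn_in=2_000, seed=0):
    """Independence Metropolis-Hastings on the log scale.

    log_target(a): unnormalized log posterior (returns -inf outside the support)
    propose(rng): candidate drawn independently of the current state
    log_proposal(a): log proposal density or mass at a (constants may be dropped)
    init: starting value, which must lie in the support of the target
    """
    rng = random.Random(seed)
    current, lt_current = init, log_target(init)
    draws = []
    for it in range(n_iter):
        cand = propose(rng)
        lt_cand = log_target(cand)
        if lt_cand > -math.inf:
            # log of [target(cand) q(current)] / [target(current) q(cand)]
            log_ratio = lt_cand - lt_current + log_proposal(current) - log_proposal(cand)
            if math.log(1.0 - rng.random()) < log_ratio:  # log of a uniform on (0, 1]
                current, lt_current = cand, lt_cand
        if it >= burn_in:
            draws.append(current)
    return draws


def beta_half_proposal(rng):
    """Candidate from Be(0.5, 0.5), the proposal described for the Jeffreys prior."""
    return rng.betavariate(0.5, 0.5)


def beta_half_log_density(a):
    # log Be(0.5, 0.5) density up to its normalizing constant; only called for 0 < a < 1
    return -0.5 * math.log(a) - 0.5 * math.log(1.0 - a)
```

With the default settings the function returns 8000 post-burn-in draws, mirroring the 10,000 iterations and 2000 burn-in used in the simulation study.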

We have generated 100 samples from a Yule–Simon distribution with the parameter \(\alpha \) set to every value in the parameter space, 9 for \(M=10\) and 19 for \(M=20\). For each sample we have simulated from the posterior distribution of \(\alpha \), under both priors, by running 10,000 iterations, with a burn-in period of 2000 iterations.

To evaluate the priors we have considered two frequentist measures. The first is the frequentist coverage of the 95% credible interval: for each posterior, we compute the interval between the 0.025 and 0.975 quantiles and check whether the true value of \(\alpha \) is included in it. Over repeated samples, one would expect about 95% of the posterior intervals to contain the true parameter value. The second measure gives an idea of the precision of the inferential process, and is the square root of the mean squared error (MSE) of the posterior mean, relative to the parameter value: \(\sqrt{\text{ MSE }(\alpha )}/\alpha \). We have considered the MSE of the posterior median as well but, due to the approximate symmetry of the posterior, the results are very similar to those obtained with the posterior mean.

Figure 2 details the results for the simulations with \(n=100\) and a parameter space for \(\alpha \) discretized with increments of 0.1, that is \(\alpha \in \{0.1,0.2,\ldots ,0.9\}\). Comparing the coverage, we note that the loss-based prior tends to over-cover, while the Jeffreys prior, although it shows better coverage for values of \(\alpha <0.5\), deteriorates in performance as the parameter approaches the upper bound of its space. Looking at the MSE, both priors appear to have very similar performance, and the (relative) error tends to decrease as \(\alpha \) increases. In Fig. 3 we compare the frequentist performance of the Jeffreys prior with the loss-based prior defined over a more densely discretized parameter space, i.e. \(\alpha \in \{0.05,0.10,\ldots ,0.95\}\). We note a smoother behaviour compared with Fig. 2, which is due to the denser discretization considered. The coverage still reveals a tendency of the loss-based prior to over-cover, although less pronounced than in the previous case. The Jeffreys prior does not present any significant difference from the previous case, as one would expect. As for the MSE, the differences between the two priors are negligible, and the only aspect we note, as mentioned above, is a smoother decrease of the error as the parameter increases.
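The two frequentist measures can be computed from the posterior draws of each replicated sample as sketched below. The linear-interpolation quantile rule is one common convention and is an assumption here, since the quantile definition used in the study is not stated. The function names are our own.

```python
import math


def quantile(sorted_xs, p):
    """Linear-interpolation quantile of an already sorted list (one common convention)."""
    pos = p * (len(sorted_xs) - 1)
    lo = math.floor(pos)
    hi = min(lo + 1, len(sorted_xs) - 1)
    return sorted_xs[lo] + (pos - lo) * (sorted_xs[hi] - sorted_xs[lo])


def frequentist_summary(posterior_draws_per_rep, alpha_true, level=0.95):
    """Coverage of equal-tailed credible intervals and sqrt(MSE of posterior mean) / alpha."""
    tail = (1.0 - level) / 2.0
    hits, sq_errors = 0, []
    for draws in posterior_draws_per_rep:
        xs = sorted(draws)
        lower, upper = quantile(xs, tail), quantile(xs, 1.0 - tail)
        hits += lower <= alpha_true <= upper  # True counts as 1
        post_mean = sum(xs) / len(xs)
        sq_errors.append((post_mean - alpha_true) ** 2)
    coverage = hits / len(posterior_draws_per_rep)
    rel_rmse = math.sqrt(sum(sq_errors) / len(sq_errors)) / alpha_true
    return coverage, rel_rmse
```

In a simulation like the one above, `posterior_draws_per_rep` would hold the 100 post-burn-in chains obtained for one true value of \(\alpha \), and a coverage clearly above 0.95 corresponds to the over-coverage discussed for the loss-based prior.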

Fig. 2 Frequentist properties of the Jeffreys prior (dashed line) and the loss-based prior (continuous line) for \(n=100\), with the loss-based prior defined on the discretized parameter space with \(M=10\). The left plot shows the frequentist coverage of the 95% posterior credible interval; the right plot shows the square root of the MSE of the posterior mean, relative to \(\alpha \).

Fig. 3 Frequentist properties of the Jeffreys prior (dashed line) and the loss-based prior (continuous line) for \(n=100\), with the loss-based prior defined on the discretized parameter space with \(M=20\). The left plot shows the frequentist coverage of the 95% posterior credible interval; the right plot shows the square root of the MSE of the posterior mean, relative to \(\alpha \).

We now look in more detail at the objective approach by analysing two i.i.d. samples. In particular, we consider a random sample of size \(n=100\) from a Yule–Simon distribution with \(\alpha =0.40\) and a sample of the same size from a Yule–Simon distribution with \(\alpha =0.68\).

In both cases, we have sampled from the posterior distribution via Monte Carlo methods with 10,000 iterations and a burn-in period of 2000 iterations. Figure 4 shows the posterior samples and posterior histograms obtained by applying the Jeffreys prior and the loss-based prior with two different discretizations, \(M=10\) and \(M=20\). The summary statistics of the three posteriors are reported in Table 1: the mean, the median and the 95% credible interval. Comparing the posterior means, we see that all three posteriors are centered around the true parameter value. The credible interval yielded by the loss-based prior with the denser discretization (\(M=20\)) is wider than the other two. However, the difference is very small, and we can conclude that the three priors result in posteriors which carry the same uncertainty. In other words, the three objective priors perform in the same way.

Similar considerations hold for the sample of \(n=100\) observations from a Yule–Simon distribution with \(\alpha =0.68\). Inspecting Fig. 5 and Table 2, we note a very similar behaviour of the three priors: the posterior distributions are still centered around the true value of \(\alpha \), and the credible intervals do not present important differences. Note that this true parameter value is not included in either of the two discretized parameter spaces on which the loss-based prior is built; the results therefore show that the inferential process appears not to be affected by the discretization, which supports its use.

To conclude, the simulation study shows no tangible differences in the performance of the prior distributions, in the spirit of objective Bayesian analysis.

5 Real data application

To illustrate the proposed priors, both the Jeffreys and the loss-based prior for the Yule–Simon distribution, we analyze three datasets. The first dataset concerns daily increments of four popular social network stocks in the US market, the second contains the frequencies of surnames observed in the 1990 US Census, and the last consists of ‘number one’ hits in the US music industry. The convergence diagnostics for the last two datasets are illustrated in Appendix B.

5.1 Social network stock indexes

We analyze social media data; in particular, we focus on Facebook, Twitter, LinkedIn and Google. These four major companies are the most powerful social networks in the world and are listed on the Wall Street exchange market (http://finance.yahoo.com). We analyze the daily increments of the stocks, considering the adjusted closing price from the 1st of October 2014 to the 11th of March 2016, for a total of \(n=365\) observations. The daily increments are obtained as \(z_{t}=\left| r_{t}/r_{t-1}-1\right| \cdot 100\), for \(t=2,\ldots , 365\), where \(r_{t}\) is the adjusted closing price of the stock at day t, and our frequencies are built on these increments. The increments are shown in Fig. 6, while Fig. 7 shows the histogram of the frequencies of the discretized data. The discretization has been done by counting the number of times a daily return took a given value once truncated at the second decimal digit. For example, if two observed daily returns are 1.2494 and 1.2431, they are both counted as occurrences of the same value, 1.24. By inspecting the histograms in Fig. 7, the (transformed) Yule–Simon distribution appears to be a suitable statistical model for the data. Although the daily increments form a time series, the autocorrelation and partial autocorrelation function plots (Fig. 8) show no significant serial correlation at the lags considered, so the data can be treated as if they were an i.i.d. sample. We apply the Bayesian framework and obtain the posterior distribution for the parameter of interest as

\[ \pi (\alpha |\mathbf k ) \propto L(\mathbf k |\alpha )\, \pi (\alpha ), \]

where \(\mathbf k =(k_1,\ldots ,k_n)\) represents the set of observations, i.e. the frequencies of the discretized daily returns, \(L(\mathbf k |\alpha )\) is the likelihood function and \(\pi (\alpha )\) is the prior distribution, which takes the form of either the Jeffreys prior in (3) or the loss-based prior in (4). We have obtained the posterior distributions for the parameter \(\alpha \) of the transformed Yule–Simon distribution by Monte Carlo methods, running 25,000 iterations with a burn-in period of 5000 iterations. The chains and the histograms of the posterior distributions are reported in Figs. 9 and 10, with the corresponding summary statistics in Table 3. To save space, we have included the plots for the Facebook and Google daily returns only.
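The construction of the frequencies \(k_i\) from the price series can be sketched as follows, assuming the adjusted closing prices are given as a plain list ordered in time. The function names are hypothetical. The small tolerance added before truncation is our own safeguard against binary floating-point representation (for example, `0.29 * 100` evaluates to `28.999999999999996` in Python), not part of the procedure described above.

```python
import math
from collections import Counter


def daily_increments(prices):
    """z_t = |r_t / r_{t-1} - 1| * 100 for t = 2, ..., n (absolute percentage changes)."""
    return [abs(curr / prev - 1.0) * 100.0 for prev, curr in zip(prices, prices[1:])]


def frequencies_of_truncated(values, decimals=2):
    """Number of occurrences of each distinct value after truncation to `decimals` digits.

    Each count becomes one observation k_i of the Yule-Simon model.
    """
    scale = 10 ** decimals
    keys = [math.floor(v * scale + 1e-9) for v in values]  # truncated value, in units of 10^-decimals
    return list(Counter(keys).values())
```

A call such as `frequencies_of_truncated(daily_increments(prices))` then yields the vector \(\mathbf k \) that enters the likelihood.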

For all four assets, the results for \(\alpha \) are very similar across priors, with minimal (or no) difference between the posterior means and medians. The credible intervals are also very similar, with a slightly larger width when the loss-based prior with \(M=20\) is applied. One way of interpreting the results is as follows. The parameter \(\alpha \) can be seen as the probability that the next observation differs from those observed so far; therefore, Twitter has the highest chance of taking a daily increment not yet observed, while Google has the smallest.

5.2 Census data: surname analysis

The second example concerns the frequency of surnames in the US (http://www.census.gov/en.html). From the population censuses (Maruvka et al. 2010), we focus on the US Census completed in 1990 and consider the 500 most common surnames; Table 4 lists the 10 most frequent ones. Briefly, the process followed by Maruvka et al. (2010) to obtain the data removes Senior (SR), Junior (JR) or a number from the last name field (e.g. Moore Sr, Moore Jr and Moore III are all converted to Moore); in addition, each name entry was examined for a possible inversion (e.g. a first name appearing in the last name field or vice versa). However, as many names sound perfectly plausible even when inverted, the authors also considered the surname of the spouse, obtaining additional information to decide whether to invert the name field of the entire family.

The analysis has been performed by running the Markov chain Monte Carlo sampler under each prior for 25,000 iterations, with a burn-in of 5000 iterations.

The posterior samples and the posterior histograms are shown in Fig. 11, with the corresponding summary statistics of the posterior distributions reported in Table 5. We again notice similarities to the simulation study and the analysis of daily increments, in the sense that means and medians are very similar for each prior, and the 95% credible interval obtained by applying the loss-based prior with \(M=20\) is slightly larger than the one obtained by using either the Jeffreys prior or the loss-based prior with \(M=10\).

Considering the posterior mean, the estimated value of \(\alpha \) based on the 500 most common surnames in the US is roughly 1/2. In other words, there is about a 50% chance that the next observed surname is not in the list of the 500. Obviously, a larger sample size would yield a smaller posterior mean, as the number of surnames is finite and the more we observe, the harder it is to find a “new” one.

5.3 ‘Superstardom’ analysis

The last example consists of modelling the number of ‘number one’ hits a music artist had on the Billboard Hot 100 chart in the period 1955–2003. The data, displayed in Table 6, have been used by Chung and Cox (1994) and Spierdijk and Voorneveld (2009) to show an apparent absence of correlation between talent and success in the music industry.

For each of the considered priors, we have run the Monte Carlo simulation for 25,000 iterations, with a burn-in period of 5000. The posterior samples and histograms are shown in Fig. 12, with the corresponding summary statistics in Table 7.

This example allows for some interesting points of discussion. First, we note that the posterior distributions for \(\alpha \) are skewed; therefore, the posterior median is a better measure of centrality than the posterior mean. Second, it is clear that the “true” value of \(\alpha \) may be close to zero. As such, a denser discretization is more appropriate to better explore the parameter space when the loss-based prior is used. We have therefore also considered \(M=100\), with the resulting posterior summary statistics reported in Table 7. The posterior median is now similar to the one obtained using the Jeffreys prior. It is therefore advisable, when the inference on \(\alpha \) indicates values near the boundaries of the parameter space, to use a relatively dense discretization.

6 Discussions

It is surprising how, from time to time, the Bayesian literature presents gaps even for problems which appear to be straightforward. The Yule–Simon distribution undoubtedly has many possible applications, as the discussed examples and the referenced papers show, and it therefore deserved a satisfactory treatment within the Bayesian framework.

Given the importance that objective Bayesian analysis can have in applications and beyond (Berger 2006), we have presented two priors which are suitable in scenarios with minimal prior information. The first is the Jeffreys prior which, as is well known, has the appealing property of being invariant under monotone differentiable transformations of the parameter of interest. The second is derived by considering the loss in information one would incur if the ‘wrong’ model was selected. Although the latter requires a discretization of the parameter space, we have shown through simulation studies that the resulting posteriors perform very similarly, both between the Jeffreys and the loss-based prior and across different discretizations of the parameter space. This is not surprising, as both priors behave similarly, in the sense that they increase as the parameter \(\alpha \) increases. Given that the performance of the priors is virtually the same, one may choose between them on other grounds. For example, the Jeffreys prior is invariant under reparametrisation, while the loss-based prior is computationally more efficient.

We have limited our analysis to the case where the shape parameter of the Yule–Simon distribution, \(\rho \), is strictly larger than one. This allows a more convenient parametrization of the distribution, in which the new parameter \(\alpha =(\rho -1)/\rho \) is the probability that the next observation takes a value not observed before.

In addition to the simulation study, we have compared the objective priors by applying them to three datasets: the first related to financial data, the second to surnames in the US and the third to the number of hits in the music industry. All comparisons showed that the two proposed objective priors lead to similar posterior distributions. As stated in the Introduction, we have not considered whether the Yule–Simon distribution is the best model to represent the data, but limited our analysis to inference on the unknown parameter \(\alpha \).

References

Berger J (2006) The case for objective Bayesian analysis. Bayesian Anal 1:385–402

Berk R (1966) Limiting behaviour of posterior distributions when the model is incorrect. Ann Math Stat 37:51–58

Chung K, Cox R (1994) A stochastic model of superstardom: an application of the Yule distribution. Rev Econ Stat 76:771–775

Gallardo DI, Gómez HW, Bolfarine H (2016) A new cure rate model based on the Yule–Simon distribution with application to a melanoma data set. J Appl Stat. doi:10.1080/02664763.2016.1194385

Garcia Garcia JM (2011) A fixed-point algorithm to estimate the Yule–Simon distribution parameter. Appl Math Comput 217:8560–8566

Geweke J (1992) Evaluating the accuracy of sampling-based approaches to calculating posterior moments. In: Bernardo JM, Berger J, Dawid AP, Smith AFM (eds) Bayesian statistics 4. Oxford University Press, Oxford, pp 169–193

Gradshteyn I, Ryzhik I (2007) Table of integrals, series and products. Academic, New York

Jeffreys H (1961) Theory of probability. Oxford University Press, London

Leisen F, Rossini L, Villa C (2017) A note on the posterior inference for the Yule–Simon distribution. J Stat Comput Simul 87(6):1179–1188

Maruvka YE, Shnerb NM, Kessler DA (2010) Universal features of surname distribution in a subsample of a growing population. J Theor Biol 262:245–256

Merhav N, Feder M (1998) Universal prediction. IEEE Trans Inf Theory 44:2124–2147

Plummer M, Best N, Cowles K, Vines K (2006) CODA: convergence diagnosis and output analysis for MCMC. R News 6:7–11

Simon HA (1955) On a class of skew distribution functions. Biometrika 42:425–440

Spierdijk L, Voorneveld M (2009) Superstars without talent? The Yule distribution controversy. Rev Econ Stat 91:648–652

Villa C, Walker S (2015) An objective approach to prior mass functions for discrete parameter spaces. J Am Stat Assoc 110:1072–1082

Yule GU (1925) A mathematical theory of evolution, based on the conclusions of Dr. J.C. Willis. Philos Trans R Soc B 213:21–87

Acknowledgements

Fabrizio Leisen was supported by the European Community’s Seventh Framework Programme [FP7/2007-2013] under Grant Agreement No: 630677.

Appendices

A Appendix

Proof of Theorem 3.1

First of all, we note that

where \(\psi ^{(i)}\) is the polygamma function:

It is easy to see that

and

Therefore, we have that the Fisher information is:

In order to compute the Jeffreys prior, we need to compute the two expected values in Eq. (A.1) separately.

The second summation with respect to k in Eq. (A.2) can be rewritten as:

Finally, we have that:

where \(\sum _{j=1}^{\infty } \frac{1}{1-\alpha }\,\text{ B }(j,\frac{1}{1-\alpha }+1)=1\), since this is the sum of the Yule–Simon probability mass function over its whole support.

As done for the first expected value in Eq. (A.1), we now compute the second expected value in Eq. (A.1):

where the last equality follows from (A.3). Finally we obtain the following form:

Equation (A.6) can be written more compactly in terms of a hypergeometric function.

Looking at the summation, we have:

The denominator can be written as a ratio of Pochhammer symbols:

Hence, Eq. (A.8) can be written as:

where \(_{2}F_{1}(\alpha ,\beta ,\gamma ,x)\) is the Gauss hypergeometric function. Hence, Eq. (A.7) can be rewritten as:

where the last equality follows from 7.512.5 of Gradshteyn and Ryzhik (2007). Summing up,

and this concludes the proof. \(\square \)

B Convergence analysis

In this section of the appendix, we describe the convergence analysis we have performed for the Census surname dataset and the ‘Superstardom’ hits dataset.

The convergence analysis for the Census and ‘Superstardom’ datasets has been carried out using the R package coda (Plummer et al. 2006). In particular, we have computed the Geweke convergence test, the Gelman–Rubin plot and test statistic, and the autocorrelation and partial autocorrelation functions. Tables 8 and 9 report the results of Geweke’s convergence test (Geweke 1992) for the two datasets; the test statistics show no convergence issues. To perform the Gelman–Rubin test of convergence, we have run multiple chains with dispersed starting points. Figure 13 plots the shrink factor for the Census dataset, while Fig. 15 shows the factor for the ‘Superstardom’ dataset. In both cases, we see no indication of failed convergence under any of the objective priors. As a final check, we have plotted the autocorrelation and partial autocorrelation functions for both datasets under each prior. These plots, shown in Figs. 14 and 16, again give no indication of convergence problems.
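For readers unfamiliar with the Gelman–Rubin diagnostic, a simplified version of the potential scale reduction factor is sketched below. It compares the variance between the chain means with the average within-chain variance, and values close to one indicate that the chains have mixed. This is an illustration only: coda's `gelman.diag` applies additional small-sample corrections, so its values will differ slightly from this sketch.

```python
import math


def gelman_rubin(chains):
    """Simplified potential scale reduction factor for m >= 2 chains of equal length n >= 2."""
    m, n = len(chains), len(chains[0])
    means = [sum(c) / n for c in chains]
    grand = sum(means) / m
    # Between-chain variance B (scaled by n) and mean within-chain variance W
    b = n / (m - 1) * sum((mu - grand) ** 2 for mu in means)
    w = sum(sum((x - mu) ** 2 for x in c) / (n - 1) for c, mu in zip(chains, means)) / m
    var_plus = (n - 1) / n * w + b / n  # pooled estimate of the posterior variance
    return math.sqrt(var_plus / w)
```

Chains started from dispersed points that have converged give values near one, whereas chains stuck in different regions give values well above one.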

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Leisen, F., Rossini, L. & Villa, C. Objective bayesian analysis of the Yule–Simon distribution with applications. Comput Stat 33, 99–126 (2018). https://doi.org/10.1007/s00180-017-0735-1
