Abstract

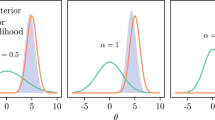

Standard Bayesian analyses can be difficult to perform when the full likelihood, and consequently the full posterior distribution, is too complex or even impossible to specify or if robustness with respect to data or to model misspecifications is required. In these situations, we suggest to resort to a posterior distribution for the parameter of interest based on proper scoring rules. Scoring rules are loss functions designed to measure the quality of a probability distribution for a random variable, given its observed value. Important examples are the Tsallis score and the Hyvärinen score, which allow us to deal with model misspecifications or with complex models. Also the full and the composite likelihoods are both special instances of scoring rules. The aim of this paper is twofold. Firstly, we discuss the use of scoring rules in the Bayes formula in order to compute a posterior distribution, named SR-posterior distribution, and we derive its asymptotic normality. Secondly, we propose a procedure for building default priors for the unknown parameter of interest that can be used to update the information provided by the scoring rule in the SR-posterior distribution. In particular, a reference prior is obtained by maximizing the average \(\alpha \)-divergence from the SR-posterior distribution. For \(0 \le |\alpha |<1\), the result is a Jeffreys-type prior that is proportional to the square root of the determinant of the Godambe information matrix associated with the scoring rule. Some examples are discussed.

Similar content being viewed by others

References

Barndorff-Nielsen OE (1976) Plausibility inference. J R Stat Soc B 38:103–131

Basu A, Harris IR, Hjort NL, Jones MC (1998) Robust and efficient estimation by minimising a density power divergence. Biometrika 85:549–559

Berger JO (2006) The case for objective Bayesian analysis. Bayesian Anal 1:1–17

Berger JO, Bernardo JM (1992) On the development of reference priors (with discussion). In: Bernardo JM, Berger JO, Dawid AP, Smith AFM (eds) Bayesian statistics. Oxford University Press, Oxford

Berger JO, Bernardo JM, Sun D (2009) The formal definition of reference priors. J Am Stat Assoc 107:636–648

Berger JO, Bernardo JM, Sun D (2012) Overall objective priors. Bayesian Anal 10:189–221

Bernardo JM (1979) Reference posterior distributions for Bayesian inference (with discussion). J R Stat Soc B 41:113–147

Bernardo JM (2005) Reference analysis. In: Dey DK, Rao CR (eds) Handbook of Bayesian statistics, vol 25. Elsevier, North-Holland, pp 17–90

Brier GW (1950) Verification of forecasts expressed in terms of probability. Mon Weather Rev 78:1–3

Chandler RE, Bate S (2007) Inference for clustered data using the independence loglikelihood. Biometrika 94:167–183

Chang I, Mukerjee R (2008) Bayesian and frequentist confidence intervals arising from empirical-type likelihoods. Biometrika 95:139–147

Corcuera JM, Giummolè F (1998) A characterization of monotone and regular divergences. Ann Inst Stat Math 50:433–450

Datta GS, Mukerjee R (2004) Probability matching priors: higher order asymptotics. Lecture Notes in Statistics 178. Springer

Dawid AP (1986) Probability forecasting. In: Kotz S, Johnson NL, Read CB (eds) Encyclopedia of statistical sciences. Wiley, New York, pp 210–218

Dawid AP (2007) The geometry of proper scoring rules. Ann Inst Stat Math 59:77–93

Dawid AP, Lauritzen SL (2005) The geometry of decision theory. In: Proceedings of the second international symposium on information geometry and its applications, pp 22–28. University of Tokyo

Dawid AP, Musio M (2014) Theory and applications of proper scoring rules. Metron 72:169–183

Dawid AP, Musio M (2015) Bayesian model selection based on proper scoring rules (with discussion). Bayesian Anal 10:479–521

Dawid AP, Musio M, Ventura L (2016) Minimum scoring rule inference. Scand J Stat 43:123–138

Farcomeni A, Ventura L (2012) An overview of robust methods in medical research. Stat Methods Med Res 21:111–133

Godambe VP (1960) An optimum property of regular maximum likelihood estimation. Ann Math Stat 31:1208–1211

Good IJ (1952) Rational decisions. J R Stat Soc B 14:107–114

Ghosh M (2011) Objective priors: an introduction for frequentists. Stat Sci 26:187–202

Ghosh M, Basu A (2013) Robust estimation for independent non-homogeneous observations using density power divergence with applications to linear regression. Electron J Stat 7:2420–2456

Ghosh M, Basu A (2016) Robust Bayes estimation using the density power divergence. Ann Inst Stat Math 68:413–437

Ghosh M, Mergel V, Liu R (2011) A general divergence criterion for prior selection Ann Inst Stat Math 43–58

Ghosh JK, Mukerjee R (1991) Characterization of priors under which Bayesian and frequentist Bartlett corrections are equivalent in the multi-parameter case. J Mult Anal 38:385–393

Greco L, Racugno W, Ventura L (2008) Robust likelihood functions in Bayesian inference. J Stat Plan Inf 138:1258–1270

Hampel FR, Ronchetti EM, Rousseeuw PJ, Stahel WA (1986) Robust statistics: the approach based on influence functions. Wiley, New York

Heritier S, Cantoni E, Copt S, Victoria-Feser MP (2009) Robust methods in biostatistics. Wiley, Chichester

Huber PJ, Ronchetti EM (2009) Robust statistics. Wiley, New York

Hyvärinen A (2005) Estimation of non-normalized statistical models by score matching. J Mach Learn Res 6:695–709

Hyvärinen A (2007) Some extensions of score matching. Comput Stat Data Anal 51:2499–2512

Jeffreys H (1961) Theory of probability. Oxford University Press, New York

Lazar NA (2003) Bayes empirical likelihood. Biometrika 90:319–326

Leisen F, Villa C, Walker SG (2017) On a global objective prior from score rules. arXiv: 1706.00599v1

Lin L (2006) Quasi Bayesian likelihood. Stat Methodol 3:444–455

Liu R, Chakrabarti A, Samanta T, Ghosh JK, Ghosh M (2014) On divergence measures leading to Jeffreys and other reference priors. Bayesian Anal 9:331–370

Machete R (2013) Contrasting probabilistic scoring rules. J Stat Plan Inf 143:1781–1790

Mameli V, Musio M, Ventura L (2018) Bootstrap adjustments of signed scoring rule root statistics. Comm Statist - Simul Comput 47:1204–1215

Mameli V, Ventura L (2015) Higher-order asymptotics for scoring rules. J Stat Plan Inf 165:13–26

Mardia KV, Jupp PE (2000) Directional statistics. Wiley, London

Mardia KV, Kent JT, Laha AK (2016) Score matching estimators for directional distributions. ArXiv:1604.08470v1

Musio M, Mameli V, Ruli E, Ventura L (2017) Bayesian Inference for directional data through ABC and homogeneous proper scoring rules. Proceeding of 61\(st\) ISI World Statistics Congress, to appear

Pace L, Salvan A, Sartori N (2011) Adjusting composite likelihood ratio statistics. Stat Sin 21:129–148

Parry M, Dawid AP, Lauritzen SL (2012) Proper local scoring rules. Ann Stat 40:561–592

Pauli F, Racugno W, Ventura L (2011) Bayesian composite marginal likelihoods. Stat Sin 21:149–164

Riani M, Atkinson AC, Perrotta D (2014) A parametric framework for the comparison of methods of very robust regression. Stat Sci 29:128–143

Ribatet M, Cooley D, Davison AC (2012) Bayesian inference from composite likelihoods, with an application to spatial extremes. Stat Sin 22:813–845

Ruli E, Sartori N, Ventura L (2016) Approximate Bayesian Computation with composite score functions. Stat Comput 26:679–692

Schennach S (2005) Bayesian exponentially tilted empirical likelihood. Ann Stat 35:634–672

Smith EL, Stephenson AG (2009) An extended Gaussian max-stable process model for spatial extremes. J Stat Plan Inf 139:1266–1275

Tsallis C (1988) Possible generalization of Boltzmann–Gibbs statistics. J Stat Phys 52:479–487

Varin C, Reid N, Firth D (2011) An overview of composite likelihood methods. Stat Sin 21:5–42

Ventura L, Cabras S, Racugno W (2010) Default prior distributions from quasi- and quasi-profile likelihoods. J Stat Plan Inf 140:2937–2942

Ventura L, Racugno W (2016) Pseudo-likelihoods for Bayesian inference. Topics on methodological and applied statistical inference, series studies in theoretical and applied statistics. Springer, Berlin, pp 205–220

Walker SG (2016) Bayesian information in an experiment and the Fisher information distance. Stat Probab Lett 112:5–9

Yang Y, He X (2012) Bayesian empirical likelihood for quantile regression. Ann Stat 40:1102–1131

Author information

Authors and Affiliations

Corresponding author

Electronic supplementary material

Below is the link to the electronic supplementary material.

Appendix

Appendix

1.1 Proof of Theorem 3.1

Proof

The posterior (5) can equivalently be written as

with \(\theta ^*=\tilde{\theta }+C(\theta -\tilde{\theta })\) and C fixed such that \(C^TK(\theta )C=G(\theta )\).

Let \(w=(w^1,\ldots ,w^d)^T=n^{1/2}(\theta -\tilde{\theta })\). Then, \(\theta =\tilde{\theta }+n^{-1/2}w\) and \(\theta ^*=\tilde{\theta }+n^{-1/2}Cw\). A posterior for w is

with

Now

with

where \(w^{ij\ldots }=w^iw^j\ldots \) is a product of components of w. Moreover,

where

The numerator in Bayes formula can thus be written as

The denominator can be approximated using the moments of the d-variate normal distribution \(N_d(0,\tilde{H}^{-1})\). We have that

being \(E(R_1(W))\) and \(E(R_3(W))\) both related to odd moments and thus equal to zero. Now,

and

Putting together expressions (16) and (17), we finally obtain the result with

and

\(\square \)

1.2 Proof of Theorem 4.1

Proof

The proof follows the same steps as in Liu et al. (2014), Section 3, generalized for the use of a SR-posterior (5) instead of the classic posterior distribution.

Let \(\pi (\theta )\) be a prior distribution for \(\theta \) and \(p(x|\theta )\) be the conditional distribution of X given \(\theta \). Thus, the marginal distribution of X can be written as \(p(x)=p(x|\theta )\pi (\theta )/\pi (\theta |x)\), with \(\pi (\theta |x)\) the conditional distribution of \(\theta \) given x. The functional (8) associated with an \(\alpha \)-divergence between the prior \(\pi (\theta )\) and the SR-posterior (5) can be written as

where \(E_\theta (\cdot )\) denotes expectation with respect to the conditional distribution of X given \(\theta \). For \(\alpha =0\) or 1, we need to interpret \(T(\pi )\) as its limiting value, when it exists.

Now, we apply the shrinkage argument for evaluating the conditional expectation in (18), \(E_\theta \left[ \pi _{SR}(\theta |X)^{1-\alpha }\pi (\theta |X)^{-1}\right] \). See Datta and Mukerjee (2004) for details on the shrinkage method.

Let us first consider the case of \(0<|\alpha |<1\).

The first step of the shrinkage method involves fixing a suitable prior distribution \(\bar{\pi }(\theta )\) and calculating the expected value of \(\pi _{SR}(\theta |x)^{1-\alpha }\pi (\theta |x)^{-1}\) with respect to the corresponding posterior \(\bar{\pi }(\theta |x)\), i.e. \(E^{\bar{\pi }}\left[ \pi _{SR}(\theta |x)^{1-\alpha }\pi (\theta |x)^{-1}|x\right] \). Notice that here we use the classic posterior based on the prior \(\bar{\pi }(\theta )\), i.e. the conditional distribution of \(\theta \) given x, \(\bar{\pi }(\theta |x)\). Using up to first order the asymptotic expansion (7) for \(\pi _{SR}(\theta |x)\) and similar expansions for \(\bar{\pi }(\theta |x)\) and \(\pi (\theta |x)\), we obtain

and

Since \(\tilde{H}\) tends to G as \(n\rightarrow \infty \), we have that

The second step of the shrinkage argument requires to integrate again with respect to the distribution of X given \(\theta \) and with respect to the prior \(\bar{\pi }(\theta )\), thus obtaining

Finally, by letting the prior \(\bar{\pi }(\theta )\) go to \(\theta \), we obtain

For \(0<|\alpha |<1\), by substituting (19) in (18) we can see that the selection of a prior \(\pi (\theta )\) corresponds to the minimization with respect to \(\pi (\theta )\) of the functional

Notice that the preceding expression can be interpreted as an increasing transformation of the \((-\alpha )\)-divergence between a density that is proportional to \(|G(\theta )|^{1/2}\) and the prior \(\pi (\theta )\). Thus, it is minimized if and only if the two densities coincide.

This result cannot be extended to the case of \(\alpha \ge 1\), as is evident from the right-hand side of (19). For \(\alpha <-1\), (20) turns out to be equivalent to a decreasing transformation of the \((-\alpha )\)-divergence between a density that is proportional to \(|G(\theta )|^{1/2}\) and the prior \(\pi (\theta )\). Thus, a maximizer for the expected \(\alpha \)-divergence in this case does not exist.

For \(\alpha \rightarrow 0\), following the same steps as for \(0<|\alpha |<1\), it can be easily shown that maximization of the average Kullback–Leibler divergence is asymptotically equivalent to minimization of

which is attained by choosing again a Jeffreys-type prior proportional to \( |G(\theta )|^{1/2}\). Indeed, the preceding expression is equal up to an additive constant to Kullback–Leibler divergence between a density that is proportional to \(|G(\theta )|^{1/2}\) and the prior \(\pi (\theta )\).

The case \(\alpha =-1\) corresponds to the Chi-square divergence. For this case the proof requires higher-order terms in the expansion of the scoring rule posterior distribution and also of both the conditional distributions of \(\theta \) given x calculated with respect to the priors \(\pi (\theta )\) and \(\bar{\pi }(\theta )\). Thus, we use (7) up to order \(O_p(n^{-3/2})\). In particular, let \(w=n^{1/2}(\theta -\tilde{\theta })\) and \(w_1=n^{1/2}(\theta -\hat{\theta })\), where \(\tilde{\theta }\) is the minimum scoring rule estimator and \(\hat{\theta }\) the maximum likelihood estimator for \(\theta \). The different posterior distributions for w and \(w_1\) given x can be written as

where \(A_1\) and \(A_2\) are defined as in Theorem 3.1, \(J^\ell (\theta )=(-\partial ^2\ell /\partial \theta \partial \theta ^T)/n\) is the observed information and \(A^\ell _1\), \(\bar{A}^\ell _1\), \(A^\ell _2\), \(\bar{A}^\ell _2\) are obtained by calculating \(A_1\) and \(A_2\) with the logarithmic score as scoring rule and \(\pi \) and \(\bar{\pi }\) as priors; for instance,

with \(\ell \) the log-likelihood and \(\ell _{ijk}\) its third-order derivatives. As usual, a tilde or a hat over a quantity indicate that it is calculated at \(\tilde{\theta }\) and \(\hat{\theta }\), respectively.

Following the shrinkage argument, we need first to evaluate

For doing this, we use the fact that

and

and we calculate the last expression in \(w_1=w+n^{1/2}(\tilde{\theta }-\hat{\theta })\).

After tedious calculations, we obtain

where \(\hat{\varvec{j}}^{ij}\) are the components of \((\hat{J}^\ell )^{-1}\) and \(R=R(\tilde{\theta },\hat{\theta })\) is a function that does not involve the prior nor its derivatives.

Now, let \(E_\theta (S_{ijk}/n)=B^S_{ijk}(\theta )+o(n^{-1/2})\), \(E_\theta (\ell _{ijk}/n)=B^\ell _{ijk}(\theta )+o(n^{-1/2})\) and recall that \(E_\theta (\tilde{H})=G(\theta )+o(n^{-1/2})\) and \(E_\theta (\hat{J}^\ell )=I(\theta )+o(n^{-1/2})\), where I is the expected information matrix. Moreover, note that \(E_\theta [(\tilde{\theta }-\hat{\theta })^i ]=o(n^{-1/2})\) and \(E_\theta [n(\tilde{\theta }-\hat{\theta })^{ij}]=g^{ij}+{\varvec{i}}^{ij}-2\sigma ^{ij}+o(n^{-1/2})\), where \(g_{ij}\) and \(g^{ij}\) are the components of G and \(G^{-1}\), respectively, \({\varvec{i}}^{ij}\) are the components of \(I^{-1}\) and \(\sigma ^{ij}=nCov_\theta (\tilde{\theta },\hat{\theta })^{ij}\). Furthermore, let \(a^S_j=B^S_{khl}c_{kj}c_{hs}c_{lt}g^{st}\), \(a^\ell _j=B^\ell _{jhk}{\varvec{i}}^{hk}\). By integrating again the above expression with respect to the distribution of X given \(\theta \), we get

where \(F=F(\theta )\) is a function that does not involve the prior nor its derivatives.

By integrating again with respect to the prior \(\bar{\pi }(\theta )\) and by letting the prior go to \(\theta \), we finally obtain the expected value needed in (18) for \(\alpha =-1\):

where \(M=M(\theta )\) is a function that does not involve the prior nor its derivatives.

Substituting (21) in (18) with \(\alpha =-1\), we find that maximization of the average Chi-square divergence is equivalent to maximization of

Let \(y=y(\theta )=(y_1(\theta ),\dots ,y_{d}(\theta ))^T\), with \(y_i(\theta )=\pi _i(\theta )/\pi (\theta )\), \(i=1,\ldots , {d}\), and let \(y'\) be the matrix of partial derivatives of y with respect to \(\theta \), i.e. \(y'=(y_{ij})_{ij}\), \(y_{ij}=\partial y_i/\partial \theta ^j\), \(i,j=1,\ldots { d}\). Then, \(\pi _{ij}/\pi =y_{ij}+y_iy_j\) and the quantity to be maximized can be rewritten as

Let us denote the integrand function by \(U(\theta ,y,y')\). The solution to the maximization problem is found by solving the system of Euler–Lagrange equations:

After some calculations, we obtain the solution to the variational problem as

where \(a^S=(a^S_1,\ldots ,a^S_{d})^T\), \(a^\ell =(a^\ell _1,\ldots ,a^\ell _{d})^T\), \(\Sigma =(\sigma ^{ij})_{ij}\), \(\Gamma =(G^{-1}-4I^{-1}+4\Sigma )^{-1}\), \(\nabla \cdot G^{-1}= (\partial g^{1j}/\partial \theta ^j, \ldots , \partial g^{{d} j}/\partial \theta ^j)^T\) and \(\nabla \cdot \Sigma = (\partial \sigma ^{1j}/\partial \theta ^j, \ldots , \partial \sigma ^{{d} j}/\partial \theta ^j)^T\). \(\square \)

Rights and permissions

About this article

Cite this article

Giummolè, F., Mameli, V., Ruli, E. et al. Objective Bayesian inference with proper scoring rules. TEST 28, 728–755 (2019). https://doi.org/10.1007/s11749-018-0597-z

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11749-018-0597-z

Keywords

- \(\alpha \)-divergences

- Composite likelihood

- Godambe information

- M-estimating function

- Reference prior

- Robustness

- Scoring rule