Abstract

Although the amount or scale of biographical knowledge held in store about a person may differ widely, little is known about whether and how these differences may affect the retrieval processes triggered by the person’s face. In a learning paradigm, we manipulated the scale of biographical knowledge while controlling for a common set of minimal knowledge and perceptual experience with the faces. A few days after learning, and again after 6 months, knowledge effects were assessed in three tasks, none of which concerned the additional knowledge. Whereas the performance effects of additional knowledge were small, event-related brain potentials recorded during testing showed amplitude modulations in the time range of the N400 component—indicative of knowledge access—but also at a much earlier latency in the P100 component—reflecting early stages of visual analysis. However, no effects were found in the N170 component, which is taken to reflect structural analyses of faces. The present findings replicate knowledge scale effects in object recognition and suggest that enhanced knowledge affects both early visual processes and the later processes associated with semantic processing, even when this knowledge is not task-relevant.


A familiar person’s face is a cue for the retrieval of a rich set of stored knowledge. The face allows access to whatever information we have about biographical facts concerning this person, as well as to her or his name. Although the amount or scale of knowledge held in store about a person may differ widely, little is known about whether and how these differences may affect the retrieval processes triggered by the person’s face. Using a learning paradigm, in the present study we investigated how face perception and person recognition are affected by the scale of the biographical knowledge available about a person. Learning included a common set of minimal knowledge for all faces and an additional manipulation of the scale of biographical knowledge, while carefully controlling for perceptual experience. In two test sessions—a few days and several months after learning—we investigated the short- and long-term effects of knowledge scale on face processing with event-related brain potentials (ERPs).

Previous research on knowledge scale effects in faces has been scant and has given only indirect clues as to the present question. The largest body of research has concerned differences between familiar and unfamiliar faces (e.g., Bentin & Deouell, 2000a, b; Eimer, 2000; Leveroni et al., 2000; Nessler, Mecklinger, & Penney, 2005; for a review, see Johnston & Edmonds, 2009). Although numerous differential effects of these types of faces have been shown, they may be attributed to differences at several levels of the face processing system. Most importantly, familiar faces differ from unfamiliar faces not only in the availability of biographical knowledge, but also in the presence of stored view-independent structural representations, so-called face recognition units (FRUs; see, e.g., Bruce & Young, 1986). All current models of face cognition assume that access to FRUs precedes access to biographical and name knowledge. Therefore, it is difficult to address the question of biographical knowledge scale effects when perceptual knowledge differs as well, as is the case for familiar versus unfamiliar faces.

There is some evidence for differences between personally known and famous faces from the public domain (Herzmann, Schweinberger, Sommer, & Jentzsch, 2004; Kloth et al., 2006). Although one might assume that more semantic knowledge is available about personally known persons than about celebrities, this may not always be the case and is hard to verify. Moreover, faces of personally familiar persons may be seen more often than famous faces, and the perceptual experience may be richer, in that the visual experience with personally familiar faces includes different perspectives, facial expressions, and movements, rather than static portraits.

Some studies have more directly addressed the question of pure biographical knowledge scale effects. Galli, Feurra, and Viggiano (2006), for instance, presented faces during a study phase either without context or with a newspaper headline that described an action presumably committed by the depicted person. During the immediately following test phase, participants discriminated between the studied faces and new faces, with no context being given. Although no performance effects of the presence of context were found during the study phase, the N170 component was smaller for faces studied with context than for those without context. This result indicates that there might be knowledge effects on ERP components that are associated with processes normally considered to be impenetrable by semantic knowledge. Likewise, Heisz and Shedden (2009) demonstrated that the N170 repetition effect (an amplitude decrease with repetitions) was present only for unfamiliar faces, but absent for faces that were familiar (in the sense that participants acquired semantic information about the faces).

In a similar study, Paller and colleagues (Paller, Gonsalves, Grabowecky, Bozic, & Yamada, 2000; see also Paller et al., 2003) observed different effects. The authors compared processing of unfamiliar faces with processing of newly learned faces that either had been associated with a name and a piece of biographical information or had been learned in isolation without any accompanying information. In contrast to the observations by Galli et al. (2006) and Heisz and Shedden (2009), Paller and colleagues found an influence of biographical knowledge on recognition performance in an old/new judgement task. While no N170 effects were reported, the authors demonstrated an enhanced positivity at frontal electrodes between 400 and 500 ms after stimulus presentation to faces associated with biographical information, relative to faces presented without information.

Finally, Kaufmann, Schweinberger, and Burton (2009) compared the processing of newly learned faces with and without biographical information with processing of entirely new faces. In contrast to the studies above, video clips were presented in the learning phase, either in silence or accompanied by a voice providing semantic information. At test, different images of the faces were presented than during learning. Kaufmann et al. observed knowledge effects on face learning between 700 and 900 ms after presentation of a face. Faces learned with biographical knowledge elicited a reduced negativity at left inferior-temporal sites. The authors also found an enhanced recognition rate for faces learned with semantic information. Furthermore, faces in the semantic condition showed an enhanced frontal negativity (in first testing blocks), which is in contrast to Paller et al. (2000), who found an enhanced positivity. No effects on earlier ERP components such as the N170 were reported.

To summarize, the few available studies on knowledge effects in face recognition have revealed rather mixed results. None of the studies above was designed to directly address the question of biographical knowledge scale effects. For example, the comparisons made in all of the above-mentioned studies were between the absence and presence of knowledge, rather than between conditions of more and less knowledge, and the tasks did not require access to semantic information. Faces accompanied by additional information of whatever sort might be processed with more attention and concentration than faces presented in isolation. In addition, all studies tested for knowledge effects directly after learning. In contrast, in the present study we investigated knowledge effects some days after learning, and then again after 6 months had passed, thus capturing long-term effects of knowledge scale (e.g., Axmacher, Haupt, Fernández, Elger, & Fell, 2008; Plihal & Born, 1997; Stickgold, 2005; for reviews, see McGaugh, 2000; Walker, 2009). Because the learned information was refreshed before each test session (see the Method section), our approach allowed long-term as well as short-term effects of knowledge scale to influence ERPs.

Hypotheses about possible knowledge scale effects for faces may be based on evidence available from word recognition (e.g., Pexman, Hargreaves, Edwards, Henry, & Goodyear, 2007; Rabovsky, Sommer, & Abdel Rahman, in press) and object recognition (Abdel Rahman & Sommer, 2008). In the studies by the present authors, precise control over preexperimental knowledge and perceptual experience during knowledge acquisition was obtained by first familiarizing pictures of initially unfamiliar objects and then associating them with some basic minimal knowledge and the objects’ names. Following successful acquisition of this knowledge, additional information was given for half of the objects, while for the other half unrelated information was presented in an analogous procedure. In a separate test session 2–5 days later, three different tests showed comparable effects on the amplitude of an ERP component in the N400 time range, presumably reflecting semantic processing, and on the amplitude of the P100 component, associated with early visual perception.

Because these knowledge-induced effects in ERPs increased when object perception was made more difficult by blurring, it was suggested that knowledge scale affects object perception by reentrant activation from higher-level processes or because it is grounded in perception. In addition, we concluded that these effects occur in an automatic fashion, because they were indistinguishable between quite different tasks, none of which referred to the additional knowledge and one of which did not even require access to semantic or name knowledge.

The primary objective of the present study was to extend the findings from word and object recognition (Abdel Rahman & Sommer, 2008; Rabovsky et al., in press) to face recognition. The design of the present experiment closely follows our previous study on knowledge effects on object processing. Portraits of initially unfamiliar faces were first associated with a name and with one piece of biographical information (the person’s nationality). Thereafter, additional biographical knowledge was acquired for half of these faces, while for the others information was presented that did not relate to the person. After several days and again after about 6 months, three different tests were conducted, none of which concerned the additional knowledge. The tasks were chosen to tap different processes involved in face recognition—namely, perception, semantic processing, and name retrieval.

In general, we primarily expected ERP results similar to those in our previous studies with objects and words. That is, with enhanced knowledge we expected an amplitude reduction in the P100 component, which is associated with low-level vision, and an amplitude increase in the N400 range, which is associated with semantic processing.

The N400 is most pronounced around 400 ms after stimulus onset. Its amplitude increases with the demands on semantic processing associated with meaningful stimuli such as words and objects (Kutas & Hillyard, 1980; Kutas, Van Petten, & Kluender, 2006). Although N400-like effects have been reported for stimuli from various domains (e.g., words, objects, and faces) and with similar time courses, the scalp distributions of the effects seem to differ markedly between domains (e.g., Eimer, 2000; Ganis, Kutas, & Sereno, 1996; Holcomb & McPherson, 1994). Therefore, while we expected knowledge scale effects for faces in the N400 time range, the topographies of these effects might differ from those for other stimulus domains, such as objects or words.

The P100 component peaks about 100–130 ms after stimulus onset and reflects processing of visual features in extrastriate cortex (Di Russo, Martínez, Sereno, Pitzalis, & Hillyard, 2001). P100 amplitude is sensitive to spatial attention (Hillyard & Anllo-Vento, 1998) and to other cognitive processes such as facial expression analysis (Meeren, van Heijnsbergen, & de Gelder, 2005).

In addition to these predictions based on previous findings, we considered possible specific effects for faces. In face studies, the P100 is followed by the N170, which peaks between 150 and 200 ms at occipito-temporal regions (e.g., Bentin, Allison, Puce, Perez, & McCarthy, 1996). This component is much smaller or absent for other visual objects and is assumed to reflect the structural analysis of faces. The N170 has often been reported as being unaffected by semantic factors such as the familiarity of the face. However, recent evidence suggests that this component may be affected by familiarity when the faces are presented in immediate succession (for recent reviews, see Eimer, in press; Schweinberger, 2011). For instance, Heisz and Shedden (2009) demonstrated that the N170 repetition effect (an amplitude decrease with repetitions) is absent for familiar faces. Thus, in contrast to our previous studies on knowledge effects in object and word recognition, if knowledge scale affects perceptual processes for faces, this might be reflected in the amplitude of the N170 component. Furthermore, during the interval between the N170 and the N400 in face research (200–300 ms), a repetition effect is often found that is larger for familiar than for unfamiliar faces. This N250 (repetition) effect is taken to reflect perceptual face learning (Schweinberger, 2011) and may therefore serve as a control for undesired differences in perceptual familiarity between the knowledge conditions.

Method

Participants

A group of 28 participants were paid for their participation in the experiment or received partial fulfilment of a curriculum requirement. Four of the participants were excluded because of high error rates in the learning or test sessions. Of the remaining 24 participants, only the 18 who had taken part in both test sessions were included in the analysis (12 females, 6 males; age range 19–30 years [M = 23]). All participants were right-handed (Oldfield score > +80) native German speakers and reported normal or corrected-to-normal visual acuity.

Materials

The target picture set consisted of 40 grayscale photographs of 20 male and 20 female unfamiliar faces, taken from frontal views. The photographs were edited for homogeneity of all features outside of the face and a light blue background color and scaled to 3.5 × 2.7 cm. At a viewing distance of approximately 1 m, they were presented in the middle of a light grey computer screen. All faces were associated with a biographical piece of information (nationality: British or Austrian) and a surname. The names were selected for compatibility with the corresponding nationality information, and thus resembled typical names of British and Austrian persons (e.g., Deaton or Egner, respectively).

For the semantic condition, we composed 20 short stories that contained biographical details about a person. All stories had a standardized format with varying identity-specific information about a fictitious person: (a) occupation, (b) hobbies, (c) marital status, and (d) political attitude (for an exemplary story, see the Appendix). Additionally, a heterogeneous set of 20 unrelated control stories, none of which contained any person-specific information, was assembled. Among these stories were cooking recipes, weather forecasts, or factual information about, for instance, traces of water on Mars or the relationship between the colors of hens and eggs. The person-specific and unrelated control stories were matched for word numbers and durations when spoken, with mean durations of 35.9 s for the person-specific condition and 36.5 s for the control condition.

In order to test for other possible differences in difficulty or memorability, we pretested the person-related and -unrelated control stories in a separate experiment. Because the stories were presented together with the corresponding faces in the learning phase of the main experiment, systematic variations in attentional demands due to varying degrees of difficulty of the semantic and unrelated control stories might be confounding factors that could complicate the theoretical interpretation of the effects of semantic expertise.

Pretest

In the pretest, 16 participants (M = 23 years, 12 women) were instructed to listen to and memorize the information given in the short stories. The stories were presented three times in random order. To compare the difficulty of the semantic and unrelated control stories, we constructed sentence completion tests in written format that consisted of the biographical and unrelated stories with four pieces of missing information that were essential for a given story. After all stories had been presented, participants were asked to fill in the information missing from the sentences as accurately and concretely as possible. Responses were scored as errors when either no or incorrect information was given. In order to be scored as correct, answers had to at least closely resemble the learned information (e.g., by being close to synonymous). An ANOVA on mean error rates revealed no significant difference between the semantic (M = 9.2%) and the unrelated control stories (M = 10.3%) in terms of the memorability of the information, F(1, 15) = 0.44, p = .53. Thus, the prerequisite of similar degrees of difficulty in the two types of short stories was met.

Procedure

Learning session

The learning session consisted of two parts. In Part 1, participants learned to associate each face with a nationality (British or Austrian) and a surname. This part of the learning session terminated with nationality and name production tasks. In Part 2 of the learning session, the same faces were presented together with the short stories. This two-step procedure was chosen in order to avoid any influences of semantic background information on the learning of faces, names, and nationalities. If the background information were to be presented together with the to-be-learned names and nationalities, we could not determine whether any effects of biographical knowledge were due to differences in the actual retrieval of semantic and name information, or instead due to differences in learning this information.

In Part 1 of the learning session, the acquisition of face–name–nationality associations proceeded as follows. At first, participants were familiarized with the unfamiliar faces. All faces were presented once in random order, and participants carried out a gender classification by means of choice responses. The assignment of gender to response hand was counterbalanced across participants. After this first familiarization, each face was presented together with name and nationality written next to the face. A trial began with a fixation cross presented for 500 ms at the centre of the screen, followed by the combined presentation of face and name. After 2 s, the written nationality information was added to the face and name for 3 s. Then, the face, name, and nationality information was replaced by a fixation cross for 500 ms, after which only the face reappeared for 4 s, requiring the participants to immediately pronounce the corresponding name and nationality (information-followed-by-recall sequence). The total trial duration was 10 s. Each face was presented twice within a miniblock containing four different faces. The combination and order of the miniblocks were counterbalanced across participants, with the restriction that each miniblock included two British and two Austrian men and women, two of whom were assigned in Part 2 to the biographical stories, and the other two to the unrelated control stories.

In a third miniblock with the same four faces, participants were instructed to recall the information immediately upon presentation of the face. In this miniblock, a fixation cross appeared in the middle of the screen for 500 ms, followed by a face without written information. Participants were instructed to produce the name and nationality within the 4-s interval of face presentation. After 4 s, the name and nationality information appeared next to the face for 3 s, providing feedback about the correctness of the response given (recall-followed-by-information sequence). The total trial duration was 7.5 s. The miniblocks were separated by breaks in which all four faces were presented on the screen, together with their name and nationality information. The duration of breaks was controlled by the participants, enabling them to rehearse the face–name–nationality information for the displayed persons.
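The two trial sequences described above can be checked with a small sketch. The phase durations are taken from the text; the phase labels, variable names, and the function are hypothetical and serve only to verify that the stated phases add up to the reported total trial durations.

```python
# Illustrative only: durations (ms) come from the procedure description;
# the phase labels and names below are hypothetical.
INFO_THEN_RECALL = [
    ("fixation cross", 500),
    ("face + name", 2000),
    ("face + name + nationality", 3000),
    ("fixation cross", 500),
    ("face alone (spoken recall)", 4000),
]

RECALL_THEN_INFO = [
    ("fixation cross", 500),
    ("face alone (spoken recall)", 4000),
    ("face + name + nationality (feedback)", 3000),
]


def trial_duration_s(phases):
    """Sum the phase durations and convert milliseconds to seconds."""
    return sum(duration_ms for _, duration_ms in phases) / 1000


print(trial_duration_s(INFO_THEN_RECALL))  # 10.0
print(trial_duration_s(RECALL_THEN_INFO))  # 7.5
```

Both sums agree with the total trial durations given in the text (10 s and 7.5 s).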

After this procedure was completed for two different sets of four faces (two miniblocks), all eight faces were presented in a subsequent bigger block in random order with a recall-followed-by-information sequence. This procedure was repeated until all 40 face–name–nationality assignments had been learned. Then, all faces were presented twice in random order with a recall-followed-by-information sequence.

Part 1 of the learning session terminated with a test that entailed three tasks: a buttonpress gender classification, described above, and spoken name and spoken nationality production tasks; each task was administered separately, with the order counterbalanced across participants. All faces were presented and named or classified twice in each task without further information given.

In Part 2 of the learning session, half of the faces were associated with short stories containing person-specific information (additional-knowledge condition), whereas the other half were presented together with unrelated stories (control condition). In each trial, a fixation cross appeared on the screen for 500 ms, followed by the combined presentation of a face and a written name and nationality while a short story was presented auditorily. Each face was presented three times, together with a short story. The faces associated with person-specific information were always assigned to the same biographical story. In contrast, faces for which no person-specific information was given were assigned to different randomly chosen unrelated stories. Thus, while all stories were presented three times, only the stories containing biographical information were repeatedly presented together with the same face, whereas the stories in the unrelated control conditions were presented each time in combination with a different face. This was done as a precaution against unwanted semantic associations between persons and the contents of the unrelated stories. The order of presentation was randomized, and the assignment of each face to person-specific or unrelated control information was counterbalanced across participants. Thus, with respect to the whole experiment, each face was associated in equal parts with semantic and control information. Part 2 of the learning session terminated with a test block identical to the one administered in Part 1.

During the entire learning session (a total duration of about 3 h, including breaks), each face was presented 23 times. The faces were presented 10 times with nationality and name and with biographical or unrelated information, and 13 times without accompanying information.

Test sessions

Electroencephalograms (EEGs) were recorded during two test sessions, a first one 2–5 days after the learning session (M = 2.6, SD = 1.0), and a second after 6–7 months. Each test session started with a questionnaire about the contents of the learning session. First, participants were given the titles of all short stories and instructed to report any details they remembered about the corresponding story. Second, they were asked whether they associated any story with a specific person of the learning phase and to write down the name of that person. Furthermore, participants were given printed forms with the 40 faces and asked to write down the name and nationality information for each person. The completed forms were assessed by the experimenter, and additional information was given if necessary.

The experiment proper consisted of three tasks, a buttonpress gender classification task, along with a spoken name and a spoken nationality production task (see Footnote 1), conducted in separate blocks of trials. On each trial, a fixation cross appeared in the middle of the screen for 500 ms, followed by a face, presented for 3 s. Participants were to respond as quickly and accurately as possible. Latencies of buttonpresses and naming responses were measured (the latter with a voice key) while the face was presented. Each face was shown three times in each of the three tasks. Within each block, the faces were presented in random order. The order of the tasks and the assignment of classifications to response hands in the gender classification task were counterbalanced. The second test session, after 6–7 months, was identical to the first test session, with one exception. To refresh participants’ memories prior to the experiment, all faces were shown once together with the name and nationality information, and the related short stories associated with the faces or the unrelated control stories were also presented once.

EEG recording and analysis

A continuous EEG was recorded with sintered Ag/AgCl electrodes at 56 scalp sites of the extended international 10–20 system (Pivik et al., 1993) at a 500-Hz sampling rate (bandpass 0.032–70 Hz). Horizontal and vertical electrooculograms (EOGs) were recorded from the external canthi and from above and below the midpoint of the right eye. All electrodes were initially referenced to the left mastoid. Electrode impedance was kept below 5 kΩ. Offline, the EEG was transformed to an average reference and low-pass filtered at 30 Hz. Eye movement artefacts were removed with a spatio-temporal dipole modeling procedure using the BESA software (Berg & Scherg, 1991). Remaining artefacts were eliminated with a semiautomatic artefact rejection procedure (amplitudes exceeding ±100 μV or changing by more than 50 μV between two successive samples or by 200 μV within single epochs, or eye movements containing baseline drifts). On average, 4.7% of trials were rejected in the naming task, 3.8% in the nationality classification task, and 2.9% in the gender classification task. Global field power (Lehmann & Skrandies, 1980) was computed as the overall ERP activity at each time point across the 56 scalp electrodes. Error- and artefact-free EEG data were segmented into epochs of 1,800 ms, starting 100 ms prior to picture onset, with a 100-ms prestimulus baseline interval.
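To make the amplitude criteria and the global field power measure concrete, the following is a minimal sketch in plain Python. It is not the authors' pipeline (which used BESA and a semiautomatic procedure). The function names and the data layout (a list of channels, each a list of samples in microvolts) are assumptions, as is the reading of "200 μV within single epochs" as a per-channel peak-to-peak range. Global field power is computed here as the spatial standard deviation across electrodes at each time point, the usual definition following Lehmann and Skrandies (1980).

```python
from statistics import pstdev


def is_artefact(epoch, abs_limit=100.0, step_limit=50.0, range_limit=200.0):
    """Apply the three amplitude criteria to one epoch (channels x samples, in microvolts)."""
    for channel in epoch:
        # Criterion 1: absolute amplitude beyond +/- abs_limit.
        if max(abs(v) for v in channel) > abs_limit:
            return True
        # Criterion 2: jump between two successive samples larger than step_limit.
        if any(abs(b - a) > step_limit for a, b in zip(channel, channel[1:])):
            return True
        # Criterion 3 (assumed peak-to-peak reading). With the default limits it is
        # implied by criterion 1; it is kept only to mirror the description.
        if max(channel) - min(channel) > range_limit:
            return True
    return False


def average_reference(epoch):
    """Subtract the mean across channels from every channel at each time point."""
    n_channels = len(epoch)
    means = [sum(column) / n_channels for column in zip(*epoch)]
    return [[v - m for v, m in zip(channel, means)] for channel in epoch]


def global_field_power(epoch):
    """Spatial standard deviation across channels at each time point."""
    return [pstdev(column) for column in zip(*epoch)]


# Hypothetical two-channel toy epoch, not real data.
toy = [[1.0, 2.0, 3.0], [-1.0, -2.0, -3.0]]
print(is_artefact(toy))
print(global_field_power(average_reference(toy)))
```

The eye-movement correction by dipole modeling and the semiautomatic (visually supervised) part of the rejection are deliberately left out of this sketch.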

ERP amplitude differences were assessed with repeated measures ANOVAs including the factors test session (2 levels), task (naming, nationality classification, or gender classification), and knowledge scale (additional knowledge vs. unrelated control condition). We focused on time windows of ERP components associated with early, low-level visual perception (P100, from 100 to 150 ms; electrode sites: PO3, PO4, O1, and O2), with higher-level visual perception (N170, from 150 to 200 ms; electrode sites: P7, P8, PO7, and PO8), and with meaning access (N400, from 300 to 500 ms; electrode sites: P3, P4, PO3, and PO4). These regions of interest were based on previous findings about knowledge scale effects for objects. An overall analysis across all electrodes additionally included the factor electrode site (56 levels). Huynh and Feldt (1976) corrections were applied when appropriate.
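As an illustration of how mean amplitudes in these windows could be extracted before entering the ANOVA, the sketch below maps the time windows to sample indices and averages each region-of-interest electrode over its window. The sampling rate, epoch start, windows, and electrode sites are taken from the text; the function names, the dictionary-based data layout, and the choice to include the window's end sample are assumptions.

```python
SAMPLING_RATE_HZ = 500   # from the recording description
EPOCH_START_MS = -100    # epochs start 100 ms before picture onset

# Time windows (ms after stimulus onset) and electrode sites as stated in the text.
WINDOWS_MS = {"P100": (100, 150), "N170": (150, 200), "N400": (300, 500)}
ROI = {
    "P100": ["PO3", "PO4", "O1", "O2"],
    "N170": ["P7", "P8", "PO7", "PO8"],
    "N400": ["P3", "P4", "PO3", "PO4"],
}


def ms_to_sample(t_ms):
    """Convert a latency relative to stimulus onset into an index within the epoch."""
    return round((t_ms - EPOCH_START_MS) * SAMPLING_RATE_HZ / 1000)


def mean_amplitudes(erp, component):
    """Return the mean amplitude per ROI electrode within the component's window.

    erp: dict mapping electrode name -> list of samples (microvolts) for one
    averaged epoch. The end sample of the window is included (an assumption).
    """
    start, stop = (ms_to_sample(t) for t in WINDOWS_MS[component])
    result = {}
    for electrode in ROI[component]:
        segment = erp[electrode][start:stop + 1]
        result[electrode] = sum(segment) / len(segment)
    return result


# Hypothetical flat ERP of 900 samples (1,800 ms at 500 Hz), not real data.
erp = {electrode: [2.0] * 900 for electrode in ROI["P100"]}
print(ms_to_sample(100), ms_to_sample(150))  # 100 125
print(mean_amplitudes(erp, "P100"))
```

Keeping one value per electrode, rather than pooling across the region of interest, matches the use of electrode site as a factor in the reported analyses.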

Results

Performance

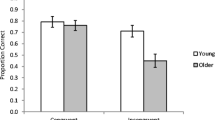

Performance data from the two test sessions are presented in Table 1. Mean reaction times (RTs) for correct responses and mean percentages of error (Err) were submitted to ANOVAs with repeated measures on the factors test session (2–5 days vs. 6–7 months after the learning session), task (spoken name production, spoken nationality production, and gender classification), and knowledge scale (related vs. unrelated knowledge). All ANOVAs were calculated with participants (F1) and items (F2) as random variables. For RTs, we found main effects of test session, F1(1, 17) = 24.3, MSE = 17,138, p < .001; F2(1, 39) = 134.1, MSE = 6,641, p < .001, and task, F1(2, 34) = 201.1, MSE = 49,140, p < .001; F2(2, 78) = 1,009.0, MSE = 32,452, p < .001, ε = .66 (see Footnote 2), reflecting decreasing latencies over the tasks, from name retrieval, to semantic fact retrieval, to gender classification. In addition, there was an interaction of test session and task, F1(2, 34) = 22.8, MSE = 8,869, p < .001; F2(2, 78) = 87.3, MSE = 5,000, p < .001, ε = .82. This interaction reflects slower name and nationality retrieval but faster gender classification in the second relative to the first test session. The main effect of knowledge scale did not reach significance, Fs < 1.5, and there were no further interactions between the experimental factors, all Fs < 2.3.

For error rates, there was a main effect of task, F1(2, 34) = 20.9, MSE = 209, p < .001, ε = .63; F2(2, 78) = 73.0, MSE = 120, p < .001, ε = .68. The effect of test session reached significance in the items analysis, F2(1, 39) = 15.2, MSE = 41, p < .001, but not in the participants analysis, F1(1, 17) = 1.8, MSE = 149, p = .18. Similarly, there was a main effect of knowledge scale in the items analysis, F2(1, 39) = 5.1, MSE = 20, p < .05, reflecting fewer errors when more biographical information was provided, but no significant effect in the participants analysis, F1(1, 17) = 1.1, MSE = 44, p = .30. No interaction reached significance, Fs < 2.3.

To summarize, knowledge scale did not affect response latencies. It did, however, yield a trend towards less error-prone task performance with increasing biographical background knowledge.

Event-related potentials

Figure 1 depicts the global field power of the ERPs for correct responses in the three tasks, collapsed across test sessions, and the topographical distributions of knowledge scale effects; Figure 2 shows ERPs at the posterior electrodes separately for the two test sessions. The figures show two effects of knowledge scale. First, knowledge effects can be seen in the P100 time window, with smaller amplitudes in the additional-knowledge relative to the unrelated control condition. The ANOVA of mean ERP amplitudes in this time window (100–150 ms) at electrode sites PO3, PO4, O1, and O2 revealed a main effect of knowledge condition, F(1, 17) = 5.5, MSE = 3,286, p < .05. Except for an interaction between test session and electrode site, F(2, 34) = 7.5, MSE = 2,986, p < .01, no other main effects or interactions approached significance, Fs < 1.8. Thus, knowledge scale appears to affect the amplitude of the P100 across all tasks. Despite nonsignificant interactions between test session and knowledge scale, the effect size estimates (partial eta squared) suggest that the effects decreased from Test Session 1 (ηp² = .32) to Test Session 2 (ηp² = .04); see also Fig. 2. The more conservative analysis across all 56 electrodes (summarized in Table 2) yielded only a trend for the main effect of knowledge condition, suggesting that knowledge effects are restricted to posterior electrodes (where the P100 is most prominent) and are not strong enough to persist across all 56 electrodes.
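For readers who want to relate the reported F values to partial eta squared, the standard identity ηp² = (F × df_effect) / (F × df_effect + df_error) can be sketched as follows. The function name is hypothetical. The inputs used below are F values reported in the Results for main effects of knowledge scale collapsed across test sessions, so the outputs are not expected to match the session-specific estimates (.32 and .04), which stem from separate analyses whose F values are not reported here.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared from an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Main effect of knowledge scale in the P100 window, F(1, 17) = 5.5.
print(round(partial_eta_squared(5.5, 1, 17), 2))   # 0.24

# Main effect of knowledge scale in the N400 window, F(1, 17) = 10.1.
print(round(partial_eta_squared(10.1, 1, 17), 2))  # 0.37
```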

Fig. 1. (Top panels) Global field power of grand mean event-related potential waveforms (n = 18) for the three different tasks, collapsed across Test Sessions 1 and 2: (A) naming task, (B) nationality classification, and (C) gender classification. (Bottom panels) Scalp distributions of knowledge scale effects (biographical knowledge – unrelated control condition) for (left) the P100 time window and (right) the N400 time window.

It is conceivable that the amplitude differences between the experimental conditions during the P100 might be due to different numbers of included trials in which the participant blinked or made an eye movement at the moment the stimulus was delivered. Although electroocular artefacts were corrected and the stimulus could still be seen due to the long presentation time, the P100 might not appear at the standard latency because visual input would occur only later. In order to check this possibility, we counted the instances in which a blink or eye movement took place in the interval from 200 ms before to 150 ms after stimulus onset. This was the case in 0.6% of the trials from the additional-knowledge condition, and 0.3% of the trials from the control condition, a difference that was not significant according to a Wilcoxon signed rank test, p > .07. Moreover, the effect of eye-related activity did not explain the condition differences. The mean amplitude of the condition effect at electrodes PO3, PO4, O1, and O2 after eliminating the few trials with eye activity in the relevant interval across all tasks and test sessions was even slightly bigger than in the original analysis (M = 0.54 vs. 0.33 μV).

Knowledge scale effects could also be seen in the N400 time window, with an increased posterior negativity in the additional-knowledge relative to the control condition (see Figs. 1 and 2). An ANOVA of mean ERP amplitudes in this time window (300–500 ms) at electrode sites P3, P4, PO3, and PO4 revealed main effects of knowledge scale, F(1, 17) = 10.1, MSE = 2,547, p < .01, and electrode site, F(3, 51) = 4.93, MSE = 17,201, p < .05, as well as a marginally significant interaction between electrode site and knowledge condition, F(3, 51) = 3.3, MSE = 224, p = .053. This interaction reflects a slight left lateralization of the knowledge scale effects [F(1, 17) = 13.4, MSE = 1,518, p < .01, for electrodes P3 and PO3, and F(1, 17) = 4.8, MSE = 1,491, p < .05, for electrodes P4 and PO4], although the interaction of hemisphere and knowledge condition did not reach significance, F(1, 17) = 3.6, MSE = 463, p = .074. The main effect of knowledge scale was confirmed in the overall analysis including all 56 electrode sites (Table 2). In this analysis, no interaction of knowledge scale with the other factors was found. There was also a trend in an analysis with the midline electrodes Cz, Pz, and Oz, F(1, 17) = 4.2, p = .056.
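
The dependent measure in these ANOVAs, the mean amplitude within a time window averaged over a set of electrodes, can be illustrated with a short sketch. The data layout (a dictionary mapping electrode names to sampled amplitudes), the function name, the inclusive window bounds, and the toy values are assumptions made for illustration only; they are not the recorded data.

```python
from statistics import fmean
from typing import Dict, Sequence, Tuple


def mean_window_amplitude(
    erp: Dict[str, Sequence[float]],
    times_ms: Sequence[float],
    channels: Sequence[str],
    window_ms: Tuple[float, float],
) -> float:
    """Mean amplitude over the samples in window_ms (inclusive), averaged across channels."""
    start, end = window_ms
    idx = [i for i, t in enumerate(times_ms) if start <= t <= end]
    if not idx:
        raise ValueError("no samples fall inside the requested window")
    per_channel = [fmean([erp[ch][i] for i in idx]) for ch in channels]
    return fmean(per_channel)


# Toy waveforms in microvolts (hypothetical), sampled at five time points.
times_ms = [250, 300, 400, 500, 550]
knowledge = {"P3": [0.0, -1.0, -2.0, -3.0, 0.0], "P4": [0.0, -1.0, -1.0, -1.0, 0.0]}
control = {"P3": [0.0, 0.0, -1.0, -2.0, 0.0], "P4": [0.0, 0.0, 0.0, 0.0, 0.0]}

n400 = (300, 500)
effect = (
    mean_window_amplitude(knowledge, times_ms, ["P3", "P4"], n400)
    - mean_window_amplitude(control, times_ms, ["P3", "P4"], n400)
)
print(effect)  # -1.0, a more negative amplitude with additional knowledge
```

The same function applies to the P100 window by passing `(100, 150)` and the occipital electrode set.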

As can be seen in Figs. 1 and 2, there were no effects of knowledge scale on the N170 amplitude, as confirmed in the analysis across all electrodes (cf. Table 2) and in an analysis including electrode sites P7, P8, PO7, and PO8 (F < 1.5), where the N170 is most pronounced.

Concerning the N250, we tested for knowledge scale effects at the typical electrodes (TP9, TP10, P7, P8, PO9, and PO10) for this component (Schweinberger, 2011). There were no significant effects of knowledge scale between 200 and 250 ms and between 250 and 300 ms (Fs ≤ 1).

Discussion

In this study, we tracked the long-term effects of the scale of biographical knowledge on face recognition. Knowledge scale effects were found in two distinct time windows and across three tasks chosen to tap into the processes of perception (gender classification), semantic processing (nationality classification), and name retrieval. First, knowledge affected ERPs in the latency range of the N400 component, often related to knowledge access. This effect was found to be stable across two test sessions conducted a few days and 6–7 months after learning. Second, knowledge affected ERPs in the latency range of the P100 component, which reflects low-level visual analyses. P100 amplitude was smaller in the biographical knowledge relative to the unrelated control condition. This effect was more pronounced in the first test session, shortly after learning, and was strongly reduced in the second test session, a few months later. These results imply that stored biographical knowledge not only affects access to semantic information, but also has an influence on early stages of visual face perception.

The present study is the first to report knowledge effects for faces with an astonishingly early temporal and functional locus (between 100 and 150 ms after stimulus onset) associated with low-level perception. Knowledge effects reported in previous studies of face recognition have been diverse but always later in time (Galli et al., 2006; Kaufmann et al., 2009; Paller et al., 2000). At present, one can only speculate on the reasons for the heterogeneity of findings across studies. The discrepancy between our results and the findings of others might be due to substantial design differences. Most importantly, the present study involved a refresher immediately before testing, but also involved long-term effects of additional knowledge, tested a few days and several months after the additional knowledge had been acquired. In contrast, the other studies investigated knowledge effects only directly after the information had been learned. However, subtle effects in the P100 component might not be present directly after learning. Thus, we suggest that memory consolidation may exert a critical influence on the specific characteristics and time course of knowledge scale effects. Because declarative memory representations build up gradually, changing over time from initially labile to more stable representations (e.g., Miller & Matzel, 2000), such potential differences might explain the divergent results. That our knowledge effects were diminished after several months does not necessarily speak against the idea of memory consolidation, because forgetting may have counteracted longer-term consolidation. Please note, however, that the reactivation of consolidated memories during retrieval may cause them to return into a more labile state similar to that during initial encoding (see, e.g., Nader, Schafe, & LeDoux, 2000). Because we checked the learned information before Test Session 1 and refreshed our participants’ memories before Test Session 2, the issue of memory consolidation cannot be resolved with the present study and should be tested more directly in future research.

Furthermore, a crucial difference between the present study and all previous studies lies in our control of visual expertise and attentional factors concerning the faces prior to and during additional knowledge acquisition. Faces both with and without additional knowledge were familiarized in precisely the same way: In the first part of the learning session, all faces were familiarized and the task-relevant knowledge was acquired in identical fashion. Only after this had been accomplished was differential knowledge introduced, in the second part of the learning session. With this two-step learning procedure, we ensured that the visual experience was identical for all of the faces. The type of information later associated with the faces could not have had any influence on the initial familiarization with the faces and on the learning of the task-relevant information.

A possible confound might be the differential homogeneities of the stories containing biographical knowledge, with their standardized format, and the more variable set of unrelated control stories. We reasoned that a very homogeneous set of control stories—containing, for instance, only cooking recipes—might have introduced unwanted differences in vigilance, attention, and so forth because this condition might have been of less interest for most participants. Therefore, we decided to vary the information contents of the unrelated control stories. However, in two similar studies on object learning, we had used homogeneous sets of cooking recipes and found very similar patterns of results in the P100 and N400 time windows (Abdel Rahman & Sommer, 2008; Rabovsky et al., in press). Therefore, we are confident that differential homogeneities of the stories in the related and unrelated control conditions could not account for the P100 and N400 modulations found here.

One might also wonder whether P100 amplitudes could have been influenced by the fact that the same biographical information was presented repeatedly for a given face in the biographical knowledge condition, whereas the unrelated control stories were different for each presentation of a given face in the control condition. As mentioned above, this was done to prevent unwanted associations between the contents of the unrelated stories and the faces. Although these different modes of presentation represent a possible confound with the knowledge conditions, we consider it highly unlikely that this variation during the learning phase might have impacted the P100 recorded in the test phase a few days later when great care had been taken to control everything apart from the previous learning experience. However, even if such a trial-by-trial variation in the learning phase could have had an effect in the test phase, this would have to be considered a type of high-level effect similar to the knowledge scale effects in question.

Thus, the present findings specifically relate to differences in the scale of knowledge, not to confounding influences of perceptual or attentional factors or of visual expertise. Differences between the present report and earlier findings on knowledge effects might relate to any of these factors.

We should also point out that we intended specifically to investigate the effects of knowledge scale, whereas earlier studies focused on the effects of the absence/presence of any knowledge. It is our informal impression from the many learning studies with unfamiliar faces and objects that, in the absence of any information about the stimuli, participants tend to make up their own stories on the basis of, for example, associations with similar persons or objects that they know. Here, the unrelated stories and the minimal task-relevant information aimed to prevent such uncontrolled self-concocted knowledge sources. The present results extend the effects of knowledge scale found in object recognition (Abdel Rahman & Sommer, 2008). Using a learning design analogous to the one employed in the present study, we found effects of object-related knowledge in the N400 and P100 time windows (cf. Fig. 3). Although in the present study the knowledge-induced amplitude modulations in the two time windows were notably smaller than the modulations found for objects, the pattern of results was very similar. Thus, when perceptual and attentional factors during learning are controlled for, similar knowledge scale effects emerge in the two domains. The similarities concerned not only the temporal dynamics of the early and late effects (P100 and N400 time windows), but also the scalp topographies of the knowledge scale effects in both time windows, both effects being characterized by posterior negativities.

The reader may ask: If the face processing system does not know which person is presented at the P100 stage, how can knowledge about a specific person influence that stage? Early knowledge effects for objects have been interpreted by Abdel Rahman and Sommer (2008) as being in line with suggestions of reentrant activation from higher-order to perceptual systems. Thus, conceptual knowledge may exert a top-down influence on early perception, facilitating feature analysis by means of reentrant activation from higher-level semantic to sensory cortical areas (e.g., Bar et al., 2006; Barrett & Bar, 2009). Extending this line of argument to the present findings, similar reentrant activation of input structures may also hold true for faces or may reflect a restructuring of the neural networks in the perceptual system.

The knowledge-induced modulation of P100 amplitude suggests an early locus during low-level visual analyses that might not be specific to face processing. This interpretation is further confirmed by the similarity of the effects found here to analogous, albeit stronger, P100 effects in object, and even in word, recognition (Abdel Rahman & Sommer, 2008; Rabovsky et al., in press; see Fig. 3 for a direct comparison of knowledge effects on face and object processing). The similarity of P100 effects across stimulus domains suggests a common underlying mechanism. Thus, we suggest that knowledge affects visual processes at rather early stages. A possible cortical mechanism in the lateral intraparietal area for early effects on the basis of learned associations has recently been proposed by Peck, Jangraw, Suzuki, Efem, and Gottlieb (2009). Explanations of the present findings in terms of early reentrant effects are at variance with traditional notions of slow, serial visual processing. They are in line, however, with several current ideas of fast visual brain systems interacting with higher-order systems (e.g., Bar, 2005; Barrett & Bar, 2009; Grill-Spector & Kanwisher, 2005).

Interestingly, the systems that have been closely linked to structural processing of faces, as reflected in the N170 component (Bentin & Deouell, 2000b; Eimer, 2000; Rossion et al., 1999; Schweinberger, Pickering, Burton, & Kaufmann, 2002), appear to be exempt from such top-down modulations, at least in the present study (but see Heisz & Shedden, 2009; Kloth et al., 2006). Possibly the structural encoding of faces is not a process that is reactivated when knowledge exerts its top-down or attentional effects. This is in line with the findings that our knowledge effects are domain general, whereas the N170 is seen as a face-specific process that has rarely been reported to be influenced by knowledge-related variables. However, another reason for the absence of knowledge effects in the N170 may be that we did not present identical stimuli twice. As discussed in the introduction, it has recently been shown (see, e.g., Heisz & Shedden, 2009) that such a repetition effect in the N170 is modulated by face familiarity in a learning paradigm. It remains to be explored why such knowledge effects in the N170 depend on the direct repetition of faces. When faces are not repeated, as in the present study, knowledge does not affect the N170.

Late knowledge effects, termed here as effects in the N400 time window, have been reported in several studies with faces (e.g., Kaufmann et al., 2009; Paller et al., 2000) but also with other stimulus materials (Abdel Rahman & Sommer, 2008; Engst, Martín-Loeches, & Sommer, 2006). However, the precise timing and scalp distributions of these late effects are diverse and often differ from the classic N400 component reported in response to semantic violations in linguistic contexts (Kutas & Hillyard, 1980), but also for famous as compared to unfamiliar faces (Eimer, 2000; Schweinberger, Pfütze, & Sommer, 1995). However, differences in scalp distributions of the N400-like components associated with the processing of stimuli from different domains are in line with many previous reports (e.g., Eimer, 2000; Ganis, Kutas, & Sereno, 1996; Holcomb & McPherson, 1994) and are consistent with ideas about embodied cognition (Barsalou, 1999), suggesting that memory representations are localized in brain areas responsible for the perceptual and motor processing of these objects and events.

Interestingly, there were no significant effects in the time range of the N250 component (around 200–300 ms). Because this component is assumed to reflect perceptual face learning (e.g., Tanaka, Curran, Porterfield, & Collins, 2006) but not semantic learning (e.g., Kaufmann et al., 2009), it might serve as a control against undesired perceptual differences between conditions. The insensitivity of the N250 to knowledge scale thus provides further support that our manipulation was semantic in nature and did not involve perceptual factors. Furthermore, it ties well with the idea that the effects observed here are not specific to faces, but generalize across different types of objects.

It is less clear how our findings relate to episodic memory retrieval effects. In principle, we cannot exclude the idea that episodic memory contributed to the present semantic knowledge effects. However, we should point out here that this holds not only for the present report, but also for other studies manipulating the amount of semantic knowledge. Furthermore, the typical episodic-memory effects in ERPs consist of positive-going left parietal old/new effects that are enhanced when elicited by test items associated with full relative to partial recollection (e.g., Vilberg, Moosavi, & Rugg, 2006). Such effects seem to differ markedly from the effects observed here. Thus, further research will be required for a clear distinction between knowledge scale and episodic-memory effects.

Performance in the present experiment was not significantly affected by knowledge scale. This is similar to our previous findings for objects that were easy to perceive; however, performance effects of knowledge scale did appear when the objects were blurred, and thus more difficult to perceive (Abdel Rahman & Sommer, 2008). Therefore, we presume that with the present design performance effects might also appear if perception were to be more challenging, and thus increase the sensitivity of the performance measures.

An interesting topic for further research concerns specific types of knowledge effects. In the present study, participants learned typical biographical information, such as occupation. However, there might not be anything special to such biographical information relative to other kinds that might be associated with a person (see, e.g., Abdel Rahman, in press, for effects of affective biographical information on face recognition that develop slightly later in time). Thus, fixed associations with atypical pieces of information (e.g., his or her favourite cooking recipe) might yield the same kinds of effects obtained here. In conclusion, this article has reported effects of biographical knowledge scale not only on comparatively late processes in face recognition classically associated with semantic memory retrieval, but also on early and low-level perceptual analyses, suggesting top-down influences of semantic on perceptual stages.

Notes

Accumulating recent evidence has demonstrated the feasibility of combining overt articulation with the ERP technique. In particular, the time interval before articulation (which is the time interval of interest here) is not significantly affected by speech artefacts (e.g., Abdel Rahman & Sommer, 2008; Aristei, Melinger, & Abdel Rahman, 2011; Costa, Strijkers, Martin, & Thierry, 2009; Hirschfeld, Jansma, Bölte, & Zwitserlood, 2008).

When the sphericity assumption was violated, the Huynh–Feldt ε value for correction of the degrees of freedom is reported, together with the uncorrected degrees of freedom and the corrected significance levels.

References

Abdel Rahman, R. (in press). Facing good and evil: Early brain signatures of affective biographical knowledge in face recognition. Emotion. doi:10.1037/a0024717

Abdel Rahman, R., & Sommer, W. (2008). Seeing what we know and understand: How knowledge shapes perception. Psychonomic Bulletin & Review, 15, 1055–1063. doi:10.3758/PBR.15.6.1055

Aristei, S., Melinger, A., & Abdel Rahman, R. (2011). Electrophysiological chronometry of semantic context effects in language production. Journal of Cognitive Neuroscience, 23, 1567–1586.

Axmacher, N., Haupt, S., Fernández, G., Elger, C. E., & Fell, J. (2008). The role of sleep in declarative memory consolidation—Direct evidence by intracranial EEG. Cerebral Cortex, 18, 500–507.

Bar, M. (2005). Top-down facilitation of visual object recognition. In L. Itti, G. Rees, & J. K. Tsotsos (Eds.), Neurobiology of attention (pp. 140–145). Boston: Academic Press/Elsevier.

Bar, M., Kassam, K. S., Ghuman, A. S., Boshyan, J., Schmidt, A. M., Dale, A. M., . . . Halgren, E. (2006). Top-down facilitation of visual recognition. Proceedings of the National Academy of Sciences, 103, 449–454. doi:10.1073/pnas.0507062103

Barrett, L. F., & Bar, M. (2009). See it with feeling: Affective predictions during object perception. Philosophical Transactions of the Royal Society B, 364, 1325–1334. doi:10.1098/rstb.2008.0312

Barsalou, L. W. (1999). Perceptual symbol systems. The Behavioral and Brain Sciences, 22, 577–660. doi:10.1017/S0140525X99002149

Bentin, S., Allison, T., Puce, A., Perez, E., & McCarthy, G. (1996). Electrophysiological studies of face perception in humans. Journal of Cognitive Neuroscience, 8, 551–565.

Bentin, S., & Deouell, L. Y. (2000a). Face detection and face identification: ERP evidence for separate mechanisms. Cognitive Neuropsychology, 17, 35–55.

Bentin, S., & Deouell, L. Y. (2000b). Structural encoding and identification in face processing: ERP evidence for separate mechanisms. Cognitive Neuropsychology, 17, 35–54.

Berg, P., & Scherg, M. (1991). Dipole models of eye movements and blinks. Electroencephalography and Clinical Neurophysiology, 79, 36–44. doi:10.1016/0013-4694(91)90154-V

Bruce, V., & Young, A. (1986). Understanding face recognition. British Journal of Psychology, 77, 305–327.

Costa, A., Strijkers, K., Martin, C., & Thierry, G. (2009). The time-course of word retrieval revealed by event-related brain potentials during overt speech. Proceedings of the National Academy of Sciences, 106, 21442–21446.

Di Russo, F., Martínez, A., Sereno, M. I., Pitzalis, S., & Hillyard, S. A. (2001). Cortical sources of the early components of the visual evoked potential. Human Brain Mapping, 15, 95–111.

Eimer, M. (2000). Event-related brain potentials distinguish processing stages involved in face perception and recognition. Clinical Neurophysiology, 111, 694–705.

Eimer, M. (in press). The face-sensitive N170 component of the event-related brain potential. In A. J. Calder, G. Rhodes, M. H. Johnson, & J. V. Haxby (Eds.), Oxford handbook of face perception. Oxford: Oxford University Press.

Engst, F. M., Martín-Loeches, M., & Sommer, W. (2006). Memory systems for structural and semantic knowledge of faces and buildings. Brain Research, 1124, 70–80.

Galli, G., Feurra, M., & Viggiano, M. P. (2006). “Did you see him in the newspaper?” Electrophysiological correlates of context and valence in face processing. Brain Research, 1119, 190–202.

Ganis, G., Kutas, M., & Sereno, M. I. (1996). The search for “common sense”: An electrophysiological study of the comprehension of words and pictures in reading. Journal of Cognitive Neuroscience, 8, 89–106.

Grill-Spector, K., & Kanwisher, N. (2005). Visual recognition—As soon as you know it is there, you know what it is. Psychological Science, 16, 152–160.

Heisz, J. J., & Shedden, J. M. (2009). Semantic learning modifies perceptual face processing. Journal of Cognitive Neuroscience, 21, 1127–1134.

Herzmann, G., Schweinberger, S. R., Sommer, W., & Jentzsch, I. (2004). What’s special about personally familiar faces? A multimodal approach. Psychophysiology, 41, 688–701. doi:10.1111/j.1469-8986.2004.00196.x

Hillyard, S. A., & Anllo-Vento, L. (1998). Event-related brain potentials in the study of visual selective attention. Proceedings of the National Academy of Sciences, 95, 781–787.

Hirschfeld, G., Jansma, B., Bölte, J., & Zwitserlood, P. (2008). Interference and facilitation in overt speech production investigated with event-related potentials. Neuroreport, 19, 1227–1230. doi:10.1097/WNR.0b013e328309ecd1

Holcomb, P. J., & McPherson, W. B. (1994). Event-related brain potentials reflect semantic priming in an object decision task. Brain and Cognition, 24, 259–276.

Huynh, H., & Feldt, L. S. (1976). Estimation of the box correction for degrees of freedom from sample data in the randomised block and split-plot design. Journal of Educational Statistics, 1, 69–82.

Johnston, R. A., & Edmonds, A. J. (2009). Familiar and unfamiliar face recognition: A review. Memory, 17, 577–596.

Kaufmann, J. M., Schweinberger, S. R., & Burton, A. M. (2009). N250 ERP correlates of the acquisition of face representations across different images. Journal of Cognitive Neuroscience.

Kloth, N., Dobel, C., Schweinberger, S. R., Zwitserlood, P., Bölte, J., & Junghöfer, M. (2006). Effects of personal familiarity on early neuromagnetic correlates of face perception. European Journal of Neuroscience, 24, 3317–3321.

Kutas, M., & Hillyard, S. A. (1980). Reading senseless sentences: Brain potentials reflect semantic incongruity. Science, 207, 203–205. doi:10.1126/science.7350657

Kutas, M., Van Petten, C., & Kluender, R. (2006). Psycholinguistics electrified II: 1994–2005. In M. Traxler & M. A. Gernsbacher (Eds.), Handbook of psycholinguistics (2nd ed., pp. 659–724). New York: Elsevier.

Lehmann, D., & Skrandies, W. (1980). Reference-free identification of components of checkerboard-evoked multichannel potential fields. Electroencephalography and Clinical Neurophysiology, 48, 609–621.

Leveroni, C. L., Seidenberg, M., Mayer, A. R., Mead, L. A., Binder, J. R., & Rao, S. M. (2000). Neural systems underlying the recognition of familiar and newly learned faces. Journal of Neuroscience, 20, 878–886.

McGaugh, J. L. (2000). Memory: A century of consolidation. Science, 287, 248–251. doi:10.1126/science.287.5451.248

Meeren, H. K. M., van Heijnsbergen, C. C. R. J., & de Gelder, B. (2005). Rapid perceptual integration of facial expression and emotional body language. Proceedings of the National Academy of Sciences, 102, 16518–16523.

Miller, R. R., & Matzel, L. D. (2000). Reconsolidation: Memory involves far more than “consolidation.” Nature Reviews Neuroscience, 1, 214–216. doi:10.1038/35044578

Nader, K., Schafe, G. E., & LeDoux, J. E. (2000). Reconsolidation: The labile nature of consolidation theory. Nature Reviews Neuroscience, 1, 216–219. doi:10.1038/35044580

Nessler, D., Mecklinger, A., & Penney, T. B. (2005). Perceptual fluency, semantic familiarity and recognition-related familiarity: An electrophysiological exploration. Cognitive Brain Research, 22, 265–288.

Paller, K. A., Ranganath, C., Gonsalves, B., LaBar, K. S., Parrish, T. B., Gitelman, D. R., . . . Reber, P. J. (2003). Neural correlates of person recognition. Learning and Memory, 10, 253–260. doi:10.1101/lm.57403

Paller, K. A., Gonsalves, B., Grabowecky, M., Bozic, V. S., & Yamada, S. (2000). Electrophysiological correlates of recollecting faces of known and unknown individuals. NeuroImage, 11, 98–110.

Peck, C. J., Jangraw, D. C., Suzuki, M., Efem, R., & Gottlieb, J. (2009). Reward modulates attention independently of action value in posterior parietal cortex. Journal of Neuroscience, 29, 11182–11191.

Pexman, P. M., Hargreaves, I. S., Edwards, J. D., Henry, L. C., & Goodyear, B. G. (2007). The neural consequences of semantic richness: When more comes to mind, less activation is observed. Psychological Science, 18, 401–406.

Pivik, R. T., Broughton, R. J., Coppola, R., Davidson, R. J., Fox, N., & Nuwer, M. R. (1993). Guidelines for the recording and quantitative analysis of electroencephalographic activity in research contexts. Psychophysiology, 30, 547–558.

Plihal, W., & Born, J. (1997). Effects of early and late nocturnal sleep on declarative and procedural memory. Journal of Cognitive Neuroscience, 9, 534–547.

Rabovsky, M., Sommer, W., & Abdel Rahman, R. (in press). Depth of conceptual knowledge modulates visual processes during word reading. Journal of Cognitive Neuroscience.

Stickgold, R. (2005). Sleep-dependent memory consolidation. Nature, 437, 1272–1278. doi:10.1038/nature04286

Rossion, B., Campanella, S., Gomez, C. M., Delinte, A., Debatisse, D., Liard, L., . . . Guerit, J.-M. (1999). Task modulation of brain activity related to familiar and unfamiliar face processing: An ERP study. Clinical Neurophysiology, 110, 449–462. doi:10.1016/S1388-2457(98)00037-6

Schweinberger, S. R. (2011). Neurophysiological correlates of face recognition. In A. J. Calder, G. Rhodes, M. H. Johnson, & J. V. Haxby (Eds.), The handbook of face perception (pp. 345–366). Oxford: Oxford University Press.

Schweinberger, S. R., Pickering, E. C., Burton, A. M., & Kaufmann, J. M. (2002). Human brain potential correlates of repetition priming in face and name recognition. Neuropsychologia, 40, 2057–2073.

Schweinberger, S. R., Pfütze, E.-M., & Sommer, W. (1995). Repetition priming and associative priming of face recognition. Evidence from event-related potentials. Journal of Experimental Psychology: Learning, Memory, and Cognition, 21, 722–736.

Tanaka, J. W., Curran, T., Porterfield, A. L., & Collins, D. (2006). Activation of preexisting and acquired face representations: The N250 event-related potential as an index of face familiarity. Journal of Cognitive Neuroscience, 18, 1488–1497. doi:10.1162/jocn.2006.18.9.1488

Vilberg, K. L., Moosavi, R. F., & Rugg, M. D. (2006). The relationship between electrophysiological correlates of recollection and amount of information retrieved. Brain Research, 1122, 161–170. doi:10.1016/j.brainres.2006.09.023

Walker, M. P. (2009). The role of sleep in cognition and emotion. Annals of the New York Academy of Sciences, 1156, 168–197.

Author note

This work was supported by Grants AB 277-1, -3, and -5 from the German Research Foundation to R.A.R. We thank Friederike Engst, Julia Junker, Jule Schmidt, Kerstin Unger, and David Wisniewski for assistance with data collection and analysis and with the preparation of the experiments.

Appendix

Example stories containing biographical knowledge and unrelated information, as well as English translations. All biographical stories had a standardized format, with varying biographical information about the occupation, hobbies, marital status/family, and political attitude of a fictitious person.

Biographical story

Dieser Mann ist Landwirt und auf Rinderzucht spezialisiert. Auf seinem Hof hält er sich auch Hühner nach Normen für Bio-Produkte, da er meint, auf einem noch jungen Markt Fuß fassen zu können. Um dies auch seinen Kunden gegenüber ausstrahlen zu können, engagiert er sich als aktives Mitglied in einer Partei, deren Programm sich überwiegend mit dem Umweltschutz befasst. In seiner freien Zeit sammelt er alte Münzen und Briefmarken, und durch Auktionsbesuche versucht er seine Sammlungen ständig zu erweitern. Er lebt mit seiner Frau und den fünf Kindern auf seinem großen Hof und ist mit dem Leben rundum zufrieden.

“This man is a farmer and specialized in cattle-breeding. On his farm he also keeps chickens according to the norms for organic farming, planning to get a foot in this developing market. To display his ecological awareness to his customers also, he is involved as an active member in a party whose program is mostly concerned with environmentalism. In his spare time, he collects antique coins and stamps, constantly trying to expand his collections. He lives with his wife and their five children on his huge farm and is thoroughly content with his life.”

Unrelated control story

Entengrütze aufs Butterbrötchen ist eine neue Frühstücksidee. Zurzeit wird getestet, wie Entengrütze als neues Gemüse beim Verbraucher ankommt. Es ist eigentlich erstaunlich, dass man erst jetzt diese Idee aufkommt, denn bei den so genannten Wasserlinsen ist die Nährstoffkombination viel günstiger als bei allen anderen bekannten Nutzpflanzen. Auf Seen und Teichen rund um den Erdball wachsen die anspruchslosen kleinen Schwimmpflanzen oft in dichten Teppichen. Enten und viele andere Wasservögel sind schon lange auf den Geschmack gekommen. Sie füttern sogar ihre Küken mit den gehaltvollen Wasserlinsen.

“Duckweed on a sandwich is a new gimmick for breakfast. The consumer acceptance for duckweed as a new vegetable is currently being tested. It is somewhat surprising that it has not yet occurred to anybody, because the so-called water lentil contains better nutrients than any other known useful agricultural crop. Across the world, the small and undemanding aquatic plants cover lakes and ponds. Ducks and other waterfowl have already acquired a taste for it, and feed their chicks with water lentils, rich in substance.”

Cite this article

Abdel Rahman, R., Sommer, W. Knowledge scale effects in face recognition: An electrophysiological investigation. Cogn Affect Behav Neurosci 12, 161–174 (2012). https://doi.org/10.3758/s13415-011-0063-9

DOI: https://doi.org/10.3758/s13415-011-0063-9